The popular perception is that bots are tools that hackers employ to perpetrate all manner of IT evil. In reality, organizations and their partners often use bots to share access to data, such as the pricing on a Web site.

Akamai today unfurled Akamai Bot Manager, a service that gives IT organizations more control over the data that bots gather from a Web site.

Renny Shen, senior product marketing manager for Akamai, says many IT organizations overlook the impact that bots can have on both Web site performance and the business in general. The Akamai Bot Manager service provides those IT organizations with more granular control over which data any given bot can access. In addition, Shen says IT organizations can opt to reroute those data requests to a specific application programming interface (API) rather than letting bots scrape that data off the Web site.
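To make the idea of per-bot control concrete, here is a minimal sketch in Python of how such a policy might be expressed. It is not Akamai's API or configuration format: the bot names, paths, `POLICY` table, and `decide` function are all hypothetical, chosen only to illustrate allowing one bot, blocking another, and rerouting a scraper to an API endpoint.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Action(Enum):
    ALLOW = "allow"
    BLOCK = "block"
    REDIRECT_TO_API = "redirect_to_api"


@dataclass(frozen=True)
class Decision:
    action: Action
    target: Optional[str] = None  # only meaningful for REDIRECT_TO_API


# Hypothetical policy table: (bot name, path prefix) -> decision.
# None of these names come from Akamai; they are illustrative only.
POLICY = {
    ("partner-price-bot", "/pricing"): Decision(Action.REDIRECT_TO_API, "/api/v1/pricing"),
    ("partner-price-bot", "/account"): Decision(Action.BLOCK),
    ("search-crawler", "/"): Decision(Action.ALLOW),
}

# Assumption: bots with no matching rule are blocked by default.
DEFAULT = Decision(Action.BLOCK)


def decide(bot_name: str, path: str) -> Decision:
    """Return the decision for a bot and path; the longest matching prefix wins."""
    best = None
    best_len = -1
    for (name, prefix), decision in POLICY.items():
        if name == bot_name and path.startswith(prefix) and len(prefix) > best_len:
            best, best_len = decision, len(prefix)
    return best if best is not None else DEFAULT
```

In this sketch, a request from the hypothetical `partner-price-bot` for `/pricing/widgets` would be pointed at `/api/v1/pricing` instead of the rendered page, while the same bot asking for `/account/settings` would be blocked.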

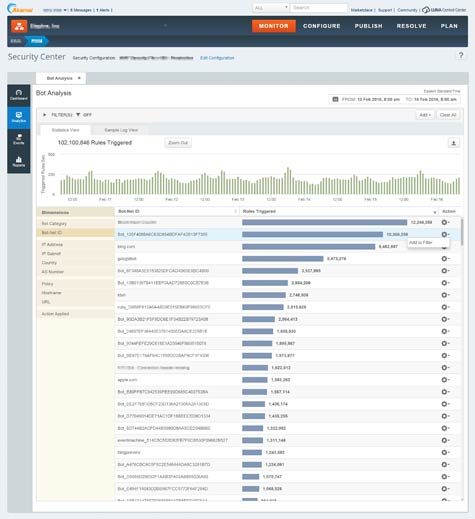

At the same time, Shen says the Akamai Bot Manager service includes a reporting tool that gives IT organizations visibility into how much of their Web traffic is being generated by bots. On average, Shen says, bots account for about 30 percent of traffic on any given site, but one customer was chagrined to discover that bots were generating 70 percent of its traffic.
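The percentages Shen cites are simply bot requests divided by total requests. A rough sketch of that arithmetic follows, assuming each request record already carries a hypothetical `is_bot` flag. How Akamai actually classifies traffic is not described here.

```python
def bot_share(requests):
    """Return the percentage of requests flagged as bot traffic."""
    total = len(requests)
    if total == 0:
        return 0.0  # avoid dividing by zero on an empty log
    bots = sum(1 for r in requests if r["is_bot"])
    return 100.0 * bots / total


# Illustrative data only: 3 bot requests out of 10.
sample = [{"is_bot": i < 3} for i in range(10)]
share = bot_share(sample)
```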

Akamai, says Shen, has a vested interest in helping IT organizations better protect Web content: if that content is easily stolen, most organizations will stop investing in the kind of rich content that requires Akamai’s content delivery network (CDN) service.

Naturally, bots, like any other application, are not in and of themselves evil. Many organizations, for example, routinely use them to share data with partners. But like any other IT tool, bots can also easily be used for nefarious purposes. The difference between good and evil usually comes down to how effectively that technology is managed.