By almost any definition, the Internet of Things is an exercise in distributed computing at scale. Sending small chunks of data across a wide area network to be processed in some central repository makes little sense: most of that data is meaningless, and the cost of moving it is often prohibitive. For that reason, as much processing as possible needs to be done either on the embedded device itself or on the nearest available gateway.

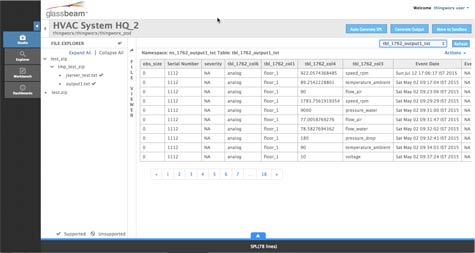

To help IT organizations accomplish that goal, Glassbeam has unveiled Glassbeam Edge, a lightweight version of its IoT software that can be used to ingest, parse and analyze unstructured data in close proximity to the embedded device. At the same time, Glassbeam is making available Glassbeam Studio, a data preparation tool that automates many of the manual processes associated with converting raw machine data into a format that yields actionable intelligence for the business.

Glassbeam CEO Puneet Pandit says that while there’s naturally a lot of interest in IoT, many organizations are underestimating how long it will take them to stand up an IoT application. In most cases, it’s a $1 million to $2 million project that takes six to nine months, says Pandit. Even then, the data being collected has to be normalized so that it can be compared and contrasted with other data sources. The result is a lot of data-cleansing effort that would be unnecessary if more of that process were automated as close to the IoT devices as possible.

Machine data in and of itself is not generally all that useful. Most businesses are trying to identify anomalies and patterns in that data that indicate a change that might be significant. In other words, what matters most in IoT environments is not the machine data but rather the analytics generated from that data. Saving massive amounts of machine data doesn’t add much value when the embedded device in question is generating a steady stream of status data that is essentially unchanged for weeks at a time. The analytics, on the other hand, provide the baseline against which future anomalies can be identified.
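To make the idea concrete, here is a minimal sketch of how a gateway might keep a running baseline and pass along only the readings that deviate from it. It is an illustration under stated assumptions, not a description of how Glassbeam Edge works: the names `RunningBaseline` and `filter_anomalies`, the three-standard-deviation threshold and the five-reading warm-up are all hypothetical choices made for this example.

```python
import math
from dataclasses import dataclass


@dataclass
class RunningBaseline:
    """Running mean and variance, kept with Welford's online algorithm."""
    count: int = 0
    mean: float = 0.0
    m2: float = 0.0  # running sum of squared deviations from the mean

    def update(self, value: float) -> None:
        self.count += 1
        delta = value - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (value - self.mean)

    @property
    def stddev(self) -> float:
        # Sample standard deviation; undefined for fewer than two readings.
        if self.count < 2:
            return 0.0
        return math.sqrt(self.m2 / (self.count - 1))


def filter_anomalies(readings, threshold=3.0, warmup=5):
    """Return (index, value) pairs that stray from the running baseline.

    threshold and warmup are illustrative defaults, not recommended values.
    """
    baseline = RunningBaseline()
    anomalies = []
    for index, value in enumerate(readings):
        if baseline.count >= warmup:
            deviation = abs(value - baseline.mean)
            if deviation > threshold * baseline.stddev:
                anomalies.append((index, value))
                continue  # keep the outlier out of the baseline
        baseline.update(value)
    return anomalies


# Hypothetical temperature readings with one spike at index 7.
readings = [20.0, 20.1, 19.9, 20.0, 20.1, 19.9, 20.0, 35.0, 20.1, 20.0]
print(filter_anomalies(readings))
```

In this sketch only the spike would need to cross the wide area network; the steady readings update the baseline locally and are otherwise discarded. A real deployment would also have to decide how to handle gradual drift and sensors whose normal range changes over time.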

For most IT organizations, IoT applications represent a brave new world. But in many respects, the fundamental principles of distributed computing still apply; they are simply being applied at a much grander scale.