While Docker containers are all the rage, stitching them together for deployment in a production environment remains a challenge. To help address that issue, Docker created a network application programming interface (API) for connecting Docker containers to one another.

Today, Weaveworks, via the release of Weave Net 1.4, is announcing that it is using that API to simplify connecting Docker containers using “micro routers.” Rather than relying on a central database to keep track of which Docker containers are associated with one another, Weaveworks has created what amounts to a couple of megabytes of code, written in the Go programming language, that can be embedded inside a container. Weaveworks COO Mathew Lodge says those micro routers let an organization more easily build applications at scale using Docker containers.

To facilitate that process, Weaveworks today also announced that it has joined the Cloud Native Computing Foundation (CNCF), a consortium of vendors dedicated to accelerating the adoption of containers and microservices architectures.

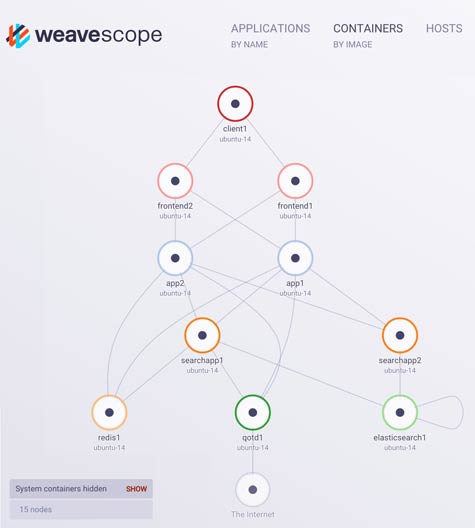

Lodge says Weaveworks developed micro routers as part of a portfolio of tools designed to make it easier to visualize and manage Docker containers. While containers in general are viewed as a boon to application developers, the sheer number of containers that can be spun up and down at a moment’s notice is likely to overwhelm most IT operations teams.

As long as Docker containers remain largely confined to application development environments, most IT operations teams will be somewhat shielded from their use. But as applications built on top of Docker containers get deployed in production environments, IT operations teams will need tools capable of monitoring and managing containers running on top of bare-metal servers, virtual machines and platform-as-a-service (PaaS) environments.

For the most part, that will mean adding new tools on top of an existing panoply of tools that most IT organizations already find dizzying to keep track of on any given day. The good news, at least, is that once containers do arrive in production environments, IT operations teams will discover that as a method of virtualization, containers are much more efficient. That should, in theory, result in significantly higher utilization rates across fewer physical servers.