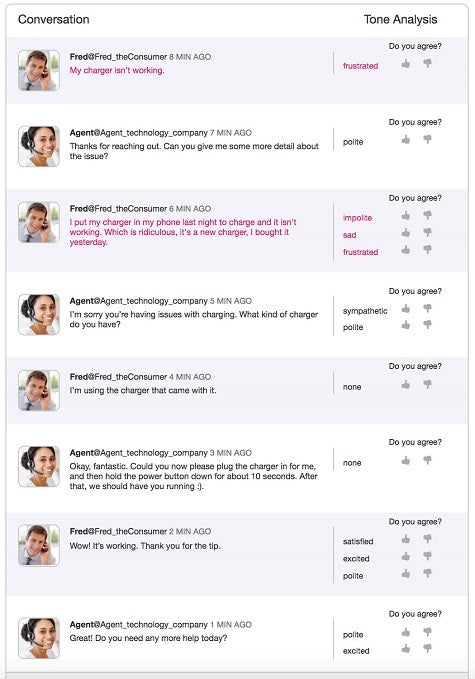

One of the criticisms leveled at the use of bots in, for example, customer service engagements is that the underlying algorithms used to create the bots don’t understand tone and context. To address that specific issue, IBM this week announced it has refined the Tone Analyzer application programming interface (API) for the IBM Watson platform to better support customer engagement applications.

Rama Akkiraju, distinguished engineer and Master Inventor for IBM Watson User Technologies, says Watson-based bots will soon better understand the tenor and tone of the conversations they are engaged in, including being able to detect satisfaction, sadness, frustration, and the level of politeness.

“We’re on a journey to add compassion to conversational systems,” says Akkiraju.

Akkiraju says those conversations can be either text- or voice-based. In some cases, Akkiraju says, people might even prefer a bot to a human, for example when they don’t want to ask a person what might seem like a dumb question. In other scenarios, Akkiraju says, customer service executives might also want to monitor the tone of bot conversations to determine when a human should take over the engagement.

Most organizations, notes Akkiraju, don’t have the resources required to fully staff a call center. After all, that’s the primary reason people spend so much time on hold waiting for their turn in a queue. Bots allow organizations to shrink those queues by resolving routine calls more efficiently while still engaging the customer.

It’ll be interesting to see over time how many people prefer to engage with a bot rather than a human. Perhaps even more interesting will be how many people can tell the difference.