Thanks to the commercialization of platforms such as IBM Watson, interest in all things related to cognitive computing is running high. Actually building these applications, however, is a major challenge. To identify the relationships between facts and events, developers must first build a library of terms and definitions that an application can draw on before it can make a meaningful cognitive recommendation.

To help address that issue, ABBYY, a provider of data capture software, this week launched ABBYY Compreno, a suite of software tools that combines an ontology with machine learning algorithms. The suite lets developers create analytics applications that understand the context linking individual facts, and even entire storylines, within a text document.

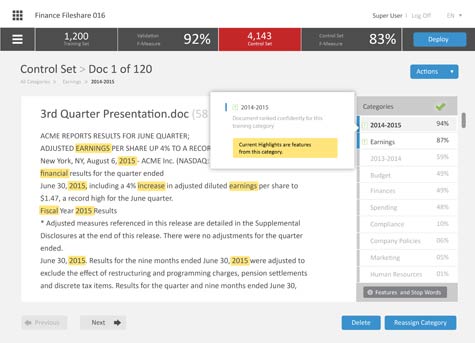

David Bayer, vice president for the Compreno Suite at ABBYY, says ABBYY Compreno consists of two components. The first is ABBYY InfoExtractor, a software development kit (SDK) built on REST application programming interfaces (APIs) that make it simpler to embed this capability in a cognitive computing application. The second is ABBYY Smart Classifier, an application that makes it simpler to organize data into classes.

In general, Bayer says, there are two approaches to building cognitive computing applications. The first relies on a statistical model, while the second applies linguistic analysis, which he says captures the relationships between different passages of text more accurately.

Regardless of the approach taken, IT organizations trying to build cognitive computing applications spend an inordinate amount of time building the content libraries these applications rely on to analyze text. Once the time required to build those libraries drops significantly, the number of cognitive computing applications that actually make it into production should grow substantially.