Here are nine easy-to-understand facts that explain why there's so much hoopla about Hadoop.

Hadoop is a way to store large amounts of data, and by large, we mean terabytes and petabytes. To give you some perspective on how much data that is, the website What's A Byte defines a terabyte as approximately a trillion bytes, enough to hold 1,000 copies of the Encyclopedia Britannica. A petabyte is approximately 1,000 terabytes and can hold 500 billion pages of standard printed text. Obviously, that isn't your typical amount of data; Hadoop is typically used when you need to deal with large volumes of machine-generated data. Hadoop's storage system is called the Hadoop Distributed File System (HDFS).
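As a quick sanity check on those figures, the arithmetic below assumes decimal (SI) units, which matches "approximately a trillion bytes." Dividing a petabyte by 500 billion pages works out to about 2,000 bytes per page, a plausible size for a page of plain text. The constant and function names are illustrative only.

```python
# Decimal (SI) units, matching the article's "approximately a trillion bytes".
TERABYTE = 10**12              # bytes
PETABYTE = 1_000 * TERABYTE    # 10**15 bytes

def bytes_per_page(total_bytes: int, pages: int) -> float:
    """Average storage per page implied by a capacity and a page count."""
    return total_bytes / pages

# The article's figure: one petabyte holds about 500 billion pages of text.
print(bytes_per_page(PETABYTE, 500 * 10**9))  # 2000.0

# Binary units are about 10% larger: a tebibyte is 2**40 bytes.
print(2**40 / TERABYTE)  # about 1.0995
```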

Hadoop isn't just storage. The second part of Hadoop is MapReduce, which processes the data stored in the Hadoop Distributed File System. Essentially, MapReduce “divides and conquers” the data, according to Hadoop creator Doug Cutting. It runs computations on the nodes where the data is stored, so a whole data set spread across hundreds, even thousands, of computers can be processed in parallel chunks. MapReduce then collects and sorts the processed data.
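The divide-and-conquer idea is easiest to see in the classic word-count example. The sketch below runs in a single Python process and only mimics the three stages: map each chunk to (word, 1) pairs, group and sort the pairs by key, then reduce each group. It is not Hadoop's actual Java API (real jobs implement Mapper and Reducer classes), and all function names here are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple

def map_phase(chunk: str) -> Iterator[Tuple[str, int]]:
    """Map: emit (word, 1) for every word in one chunk of input."""
    for word in chunk.lower().split():
        yield word, 1

def shuffle_and_sort(pairs: Iterable[Tuple[str, int]]) -> List[Tuple[str, List[int]]]:
    """Group intermediate values by key and return the groups sorted by key."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return sorted(groups.items())

def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Reduce: combine all values collected for one key."""
    return key, sum(values)

def word_count(chunks: List[str]) -> Dict[str, int]:
    # Each chunk stands in for a block stored on a different node; on a real
    # cluster the map calls would run in parallel on the nodes holding the data.
    intermediate = (pair for chunk in chunks for pair in map_phase(chunk))
    return dict(reduce_phase(k, vs) for k, vs in shuffle_and_sort(intermediate))

chunks = ["the quick brown fox", "the lazy dog", "The fox"]
print(word_count(chunks))
# {'brown': 1, 'dog': 1, 'fox': 2, 'lazy': 1, 'quick': 1, 'the': 3}
```

Because each map call only sees its own chunk, the map stage can be spread across machines; only the grouped results need to travel to the reducers.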

Google, Twitter, Facebook and other high-volume sites use Hadoop to store and analyze their Web log data.

Not only can Hadoop process massive amounts of data, it can do so at record-breaking speeds. In fact, Hadoop set a world record for data sorting when Yahoo's Grid Computing team used it to sort one terabyte of data in 62 seconds and one petabyte in 16.25 hours.
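Converting those record times into average throughput shows how well the work scaled. Assuming decimal units, the one-terabyte run averaged roughly 16.1 GB per second and the one-petabyte run roughly 17.1 GB per second, so a data set 1,000 times larger was sorted at a similar sustained rate. This rough arithmetic ignores how many nodes each run used; the function name is illustrative.

```python
TERABYTE = 10**12  # bytes, decimal units
PETABYTE = 10**15

def throughput_gb_per_s(num_bytes: float, seconds: float) -> float:
    """Average sort throughput in decimal gigabytes per second."""
    return num_bytes / seconds / 10**9

tb_rate = throughput_gb_per_s(TERABYTE, 62)
pb_rate = throughput_gb_per_s(PETABYTE, 16.25 * 3600)  # 16.25 hours in seconds
print(f"1 TB run: {tb_rate:.1f} GB/s")
print(f"1 PB run: {pb_rate:.1f} GB/s")
```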

With Hadoop, you can take a standard PC server and connect it to a hundred, several hundred or even thousands of other servers. Hadoop distributes the data files across these nodes and uses them as one large file system to store and process the data, which is why it's called a distributed file system. The record to date is a cluster of 4,000 servers, all functioning as a single unit under Hadoop. This means that if your data outgrows the capacity of your hardware, you simply add more nodes.
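To make "distributes the data files across these nodes" concrete, here is a minimal sketch assuming HDFS-style behavior: a file is split into large fixed-size blocks (128 MB by default in Hadoop 2 and later) and each block is stored on several nodes (three copies by default). The function `place_blocks` and its round-robin placement are hypothetical simplifications; real HDFS lets its NameNode pick locations using a rack-aware policy.

```python
from typing import Dict, List

def place_blocks(file_size: int, block_size: int, nodes: List[str],
                 replication: int = 3) -> Dict[int, List[str]]:
    """Split a file into fixed-size blocks and assign each block to
    `replication` distinct nodes, round-robin (a simplified policy)."""
    if replication > len(nodes):
        raise ValueError("replication factor exceeds the number of nodes")
    num_blocks = -(-file_size // block_size)  # ceiling division
    placement: Dict[int, List[str]] = {}
    for block in range(num_blocks):
        # Consecutive nodes (wrapping around) hold the copies of this block.
        placement[block] = [nodes[(block + r) % len(nodes)] for r in range(replication)]
    return placement

MB = 2**20
nodes = ["node1", "node2", "node3", "node4"]
layout = place_blocks(file_size=300 * MB, block_size=128 * MB, nodes=nodes)
for block, replicas in layout.items():
    print(block, replicas)
```

Adding a server just lengthens the `nodes` list, so new blocks spread over more machines; that is the scale-out idea behind "you simply add more nodes."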

Relational databases tend not to scale to data sets of this size, which makes Hadoop a good fit for situations in which a relational database isn't practical.

Hadoop is cheap for two reasons. First, it's open source, so there are no proprietary licensing fees. Hadoop is an Apache Software Foundation project, created by Doug Cutting and Mike Cafarella, who based it on ideas described in Google's papers on the Google File System and MapReduce. Apache maintains a list of Hadoop distributions, and IBM has announced plans to offer its own distribution.

Second, Hadoop doesn't require special processors or expensive hardware. As Cutting explained, you just need “something with an Intel processor, a networking card, and usually four or so hard drives in it.”

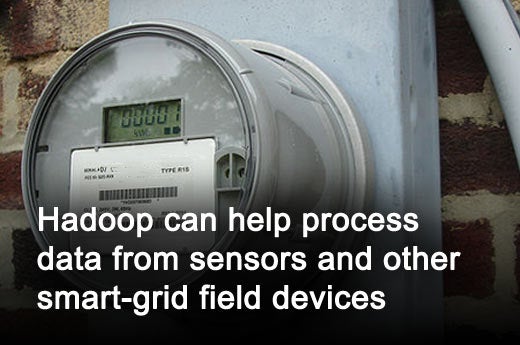

One innovative way companies are putting Hadoop to use is to analyze data collected by sensors. The Tennessee Valley Authority uses smart-grid field devices to collect data on its power-transmission lines and facilities across the country. These sensors send data 30 times per second; at that rate, the TVA estimates it will have half a petabyte of data archived within a few years, according to CNET. The TVA uses Hadoop to store and analyze that data, which helps it identify potential grid problems before power failures occur.
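A sampling rate of 30 readings per second adds up quickly. The arithmetic below counts readings for a single device only; the article gives neither the number of devices nor the size of each reading, so it does not attempt to reproduce the half-petabyte estimate. The names are illustrative.

```python
SAMPLES_PER_SECOND = 30          # rate cited for the TVA's field devices
SECONDS_PER_DAY = 24 * 60 * 60   # 86,400

def readings_per_device(days: int) -> int:
    """Number of readings one device produces over `days` at 30 samples/s."""
    return SAMPLES_PER_SECOND * SECONDS_PER_DAY * days

print(readings_per_device(1))    # 2592000
print(readings_per_device(365))  # 946080000
```

Nearly a billion readings per device per year, multiplied across a grid's worth of devices, is the kind of machine-generated volume Hadoop was built to store and scan.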

A recent survey of 102 Hadoop developers by Karmasphere found that the top reason for using Hadoop is to mine large data sets for better business intelligence. Increasingly, third-party companies are offering tools that integrate Hadoop's data with relational databases and BI systems. Cloudera, Pentaho, Talend and Netezza are among the companies offering solutions that simplify different parts of the integration process. IBM has announced plans for similar solutions based on its own Hadoop distribution.

Previously, you needed Java programmers to custom-write this sort of integration, according to Pentaho founder and CEO Richard Daley.

Given that the basic concept behind Hadoop came from Google, it's no surprise that online companies are putting Hadoop to use for Web content. Hadoop can be used to analyze historical user data, allowing Web advertisers to more accurately target readers with advertisements. It's also being used to create more relevant search results, according to Doug Cutting.

Hadoop's ability to quickly and cheaply sort through large amounts of data is allowing many businesses to make smarter decisions based on more specific information. For instance, some banks use Hadoop to combine credit ratings with internal financial data to better determine whether customers are good credit risks. This helps them identify more accurately which customers should be given loans and which should not, a decision that can have a huge effect on a bank's bottom line. In a more unusual example of how Hadoop supports smarter business, eHarmony reduced its costs by running Hadoop and MapReduce on Amazon Web Services to match clients.
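Combining credit ratings with internal financial data is, in MapReduce terms, a join of two data sets on a shared key such as a customer ID. One common way to express it is a reduce-side join: each mapper tags its records with their source, the framework groups records by key, and the reducer merges them. The sketch below runs in one process, and its data, field names and functions are all hypothetical; it only joins the records and makes no credit decision.

```python
from collections import defaultdict
from itertools import chain
from typing import Dict, Iterable, Iterator, Tuple

# (join key, source tag, value): the tag tells the reducer where a value came from.
Tagged = Tuple[str, str, float]

def map_ratings(rows: Iterable[Tuple[str, float]]) -> Iterator[Tagged]:
    """Map over the hypothetical credit-rating data set."""
    for customer_id, score in rows:
        yield customer_id, "score", score

def map_accounts(rows: Iterable[Tuple[str, float]]) -> Iterator[Tagged]:
    """Map over the hypothetical internal account data set."""
    for customer_id, balance in rows:
        yield customer_id, "balance", balance

def reduce_side_join(tagged: Iterable[Tagged]) -> Dict[str, Dict[str, float]]:
    """Group tagged values by customer ID and keep only customers present
    in both inputs (an inner join), sorted by customer ID."""
    groups: Dict[str, Dict[str, float]] = defaultdict(dict)
    for customer_id, tag, value in tagged:
        groups[customer_id][tag] = value
    return {cid: fields for cid, fields in sorted(groups.items())
            if "score" in fields and "balance" in fields}

ratings = [("c1", 720), ("c2", 580), ("c3", 690)]
accounts = [("c1", 15000.0), ("c3", 2300.0), ("c4", 800.0)]
joined = reduce_side_join(chain(map_ratings(ratings), map_accounts(accounts)))
print(joined)
# {'c1': {'score': 720, 'balance': 15000.0}, 'c3': {'score': 690, 'balance': 2300.0}}
```

Customers missing from either source (c2 and c4 here) drop out, so downstream analysis only sees records that have both a rating and account data.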