There’s been ongoing debate over how relevant traditional data warehouses remain. Organizations still need a central repository for analyzing data, but for many of them that now means building a data lake on a platform such as Hadoop. Snowflake Computing, a provider of a relational database service hosted in the cloud, is making a case for rethinking the role of the data warehouse in the age of the cloud following today’s launch of Snowflake Data Sharing.

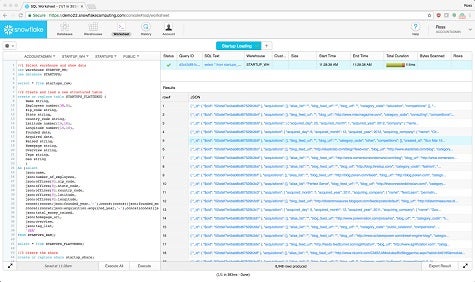

Jon Bock, vice president of marketing for Snowflake Computing, says Snowflake Data Sharing is designed to make it simple for IT organizations to give external users access to structured or semi-structured data without having to hire programmers to build that access using application programming tools. Instead, Bock says, Snowflake Data Sharing enables IT organizations to share data sets using a simple set of administrative tools, without having to copy or move data.

“We’re trying to help organizations get all the plumbing out of the way,” says Bock.

Snowflake Data Sharing is built on top of a relational database that Snowflake Computing makes available as a cloud service running on Amazon Web Services (AWS). While competition across the database-as-a-service segment of the cloud is clearly fierce these days, Bock says Snowflake Computing is gaining ground because it provides a familiar set of SQL-based tools running on a relational database that can access massive amounts of data stored on AWS.

Most organizations have come to recognize the potential value of the data they collect. But monetizing that data requires finding some way to share it. That requirement, in turn, has given rise to what’s known as the API economy. The point Snowflake Computing is making is that building an economy around sharing data using low-level APIs does more to inhibit the growth of a data economy than it does to enable it.