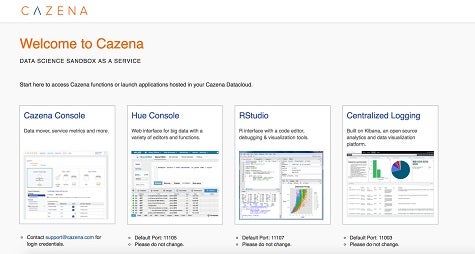

Because of what’s becoming a chronic shortage of Big Data expertise, more organizations are starting to rely on service providers not only to host their data but also to manage it. This week at the Spark Summit East conference, Cazena took that concept a step further by taking the wraps off a Data Science Sandbox as a Service, which lets data science professionals create their own sandboxes without any direct intervention from an IT department.

Cazena CEO Prat Moghe says the company often works with internal IT organizations to ingest data into its cloud service. But once the data is there, he adds, many organizations want data scientists to be able to serve their own data needs. That approach gives data scientists more control over the overall environment without requiring them to wait for someone in an internal IT department to handle their requests.

“There’s often an impedance mismatch between data scientists and IT,” says Moghe.

Moghe says the new service, based on an implementation of the open source Apache Spark 2.0 in-memory computing framework, allows data scientists to manipulate data using R, Python or SQL.

Many Big Data projects get started on-premises to prove the concept. But once an organization decides that the return on a Big Data project merits further investment, it is quickly presented with a variety of challenges associated with managing a Big Data deployment in a production environment.

Obviously, it’s still early days for most organizations when it comes to Big Data projects. But arguably the most important question for them to address is the precise division of labor they want to see between data scientists and internal IT departments. Many of those organizations may decide that limited internal IT resources are best employed elsewhere.