Business intelligence applications and spreadsheets have historically been used as decision support tools. Business leaders didn’t necessarily base decisions on what showed up in these applications; more often, they used them to justify decisions they intuitively felt were right. But with the rise of more advanced analytics applications, there’s much greater awareness of the need to make fact-based decisions that draw on a much broader range of data sources. In that context, the data being used to drive those analytics needs to be of far higher quality.

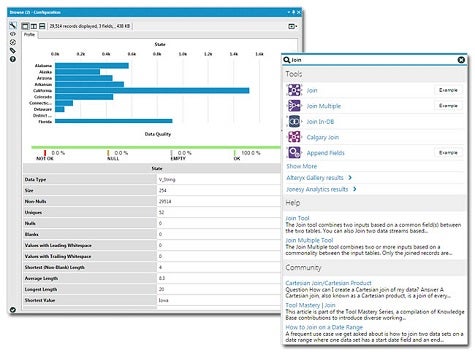

To enable end users to better maintain data quality without requiring help from an internal IT organization, Alteryx has included data profiling tools in the latest release of Alteryx Analytics. The tools make it much simpler for end users to discover on their own when there are issues with the quality of the data being analyzed.

Bob Laurent, vice president of product marketing at Alteryx, says that as line-of-business executives begin to rely more on advanced analytics, their tolerance for poor data is dropping.

“They want to know they are making decisions based on sound insights,” says Laurent.

Exactly who is responsible for data quality in an organization has long been a point of contention between IT and the business. IT staffs can set up applications, but the data that gets entered is usually the responsibility of the line of business. This latest release, says Laurent, gives end users the tools they need to better ensure the quality of their data.

Alteryx provides line-of-business users with both an analytics application and a local database. With this release, Alteryx is adding a data connections manager as well as auditing tools designed to support the audits associated with any data governance process. In addition, Alteryx now includes connectors to 75 different data sources, now including Microsoft SQL Server and Oracle databases.

In general, data quality is becoming a bigger issue as organizations look to automate processes using analytics applications that have been infused with machine learning and deep learning technologies. But if the analytics being applied locally by end users are flawed because of poor data quality, then any automated process that depends on those analytics is likely to produce a flawed outcome at a scale that might prove very hard to undo.