Snowflake Computing today unfurled an automated service that makes it possible to ingest data in near real time into its namesake data warehouse as a service hosted on Amazon Web Services (AWS), thereby eliminating the need to manually manage extract, transform and load (ETL) processes.

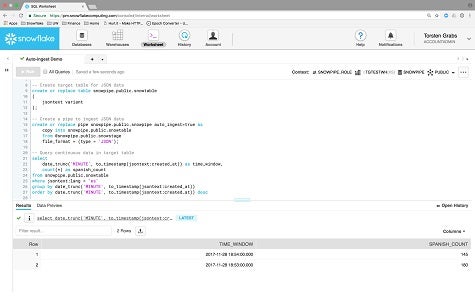

Matt Glickman, head of product for Snowflake Computing, says the Snowpipe service makes use of Amazon Kinesis, a real-time streaming data service, to extract data from multiple sources. That capability means that analytics applications running on top of the Snowflake relational database can access data stored in the Amazon Simple Storage Service (S3) in near real time.

Glickman says Snowflake Computing is able to provide this capability in part because of a previous decision to add support for a serverless computing framework on AWS. That framework makes it possible for Snowflake Computing to seamlessly add capacity as additional data streams into the data warehouse.

“It’s all automated,” says Glickman. “There are no batch processes.”
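The contrast with batch loading can be illustrated with a minimal sketch. It does not use Snowflake's or AWS's APIs; `ToyWarehouse`, `on_file_arrival` and the file names are hypothetical stand-ins, and the only assumption is that each newly staged file produces a notification that triggers its own load rather than waiting for a scheduled job.

```python
from dataclasses import dataclass, field


@dataclass
class ToyWarehouse:
    """Hypothetical in-memory stand-in for a warehouse table."""
    rows: list = field(default_factory=list)

    def load(self, records):
        # Append the records so they are immediately visible to queries.
        self.rows.extend(records)


def on_file_arrival(warehouse, staged_file):
    """Load one staged file as soon as its arrival notification fires."""
    warehouse.load(staged_file["records"])
    return len(staged_file["records"])


warehouse = ToyWarehouse()

# Simulated arrival notifications, one per new file in a staging area.
notifications = [
    {"name": "events-0001.json", "records": [{"id": 1}, {"id": 2}]},
    {"name": "events-0002.json", "records": [{"id": 3}]},
]

for staged_file in notifications:
    loaded = on_file_arrival(warehouse, staged_file)
    print(f"{staged_file['name']}: loaded {loaded} record(s), "
          f"{len(warehouse.rows)} now queryable")
```

Each file becomes queryable as soon as it arrives, which is the behavior the "no batch processes" description implies; a batch design would instead accumulate files and load them on a schedule.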

To further facilitate the transfer of data into its data warehouse, Snowflake Computing earlier this week announced support for AWS PrivateLink, a private connectivity service for reaching services hosted on AWS that doesn’t rely on the public internet.

The Snowflake service provides an alternative on AWS to the Amazon Redshift data warehouse service, which functions more like a managed hosting service that requires customers to commit to a specific amount of storage they will consume. In contrast, Glickman says the Snowflake data warehouse is a true cloud service that IT organizations can consume on a per-second basis.

Rather than storing data in platforms such as Hadoop, Snowflake Computing has been making a case for employing a traditional relational database running on AWS that has been expanded to handle massive amounts of data. That approach allows IT organizations to take advantage of cloud economics without having to acquire additional hardware and associated Hadoop expertise.

It remains to be seen just what percentage of data warehouse applications will move to the cloud. But the one thing that is certain is that data gravity is a powerful force. The more data that gets created or shifted to the cloud, the more likely it becomes that the data warehouse will be moved to be closer to that data.