Machines eventually fail, but rarely without warning. In reality, hundreds, possibly even thousands, of events hidden in machine data signal that a machine is going to fail. The challenge has been finding a cost-effective way to capture all those events so that organizations can analyze them with an eye toward predicting when a particular machine or device might fail.

With that goal in mind, GE Intelligent Platforms today launched Proficy Monitoring and Analysis, a suite of Big Data analytics applications based on Hadoop.

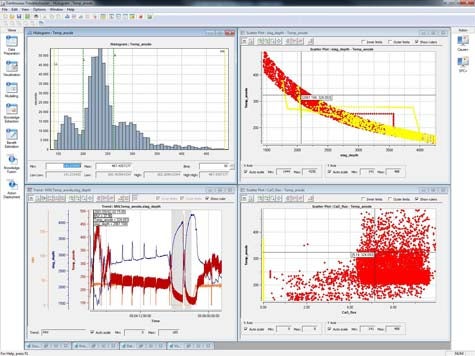

According to Brian Courtney, general manager for industrial data intelligence at GE Intelligent Platforms, machine data can reliably predict when a machine is in the early stages of failure. That information allows the machine's owner to apply predictive maintenance and avoid costly downtime.

As part of the deployment process, GE works with each organization to set up Proficy Monitoring and Analysis, essentially functioning as that organization's data scientist. Once the software is up and running, GE hands off management of the Big Data application to the customer.

Customers can then deploy Proficy Monitoring and Analysis on premises, on the Pivotal platform-as-a-service (PaaS) environment that GE is setting up on Amazon Web Services, or on any other cloud computing platform compatible with Cloud Foundry, the PaaS platform developed by Pivotal.

Courtney says that from an IT perspective, the biggest challenge with Big Data analytics is convincing factory-floor managers that it's possible to predict when a machine will fail. But once everyone accepts that the machine data contains signals of imminent failure, interest in maintaining factory uptime usually carries the day.

Proficy Monitoring and Analysis is the latest instance of what GE describes as a concerted push into an Industrial Internet built on the "Internet of Things" phenomenon, which industry analysts predict will be a $514 billion market by 2020. Of course, none of that will mean a thing if we can't find a practical way to apply all the data those devices are about to generate.