Companies rely more on their IT teams every day, for everything from securing data to finding new ways to increase productivity. To make your IT team even more successful, you need to find ways to automate processes and optimize the department. IT management software provides a suite of tools to help you get the most from your technology resources, follow sound technology practices, and deliver the best products or services your company has to offer.

To make your search for the best IT Management software easier, we’ve broken this list down into three different categories. To be considered for this list, vendors had to offer several different types of resource management (compliance, inventory, etc.), have good user reviews, and have both cloud and on-premise implementation options. There are also many different software types that make up the IT management landscape, so we’ve noted what each software is next to the name.

Top IT management software for SMBs

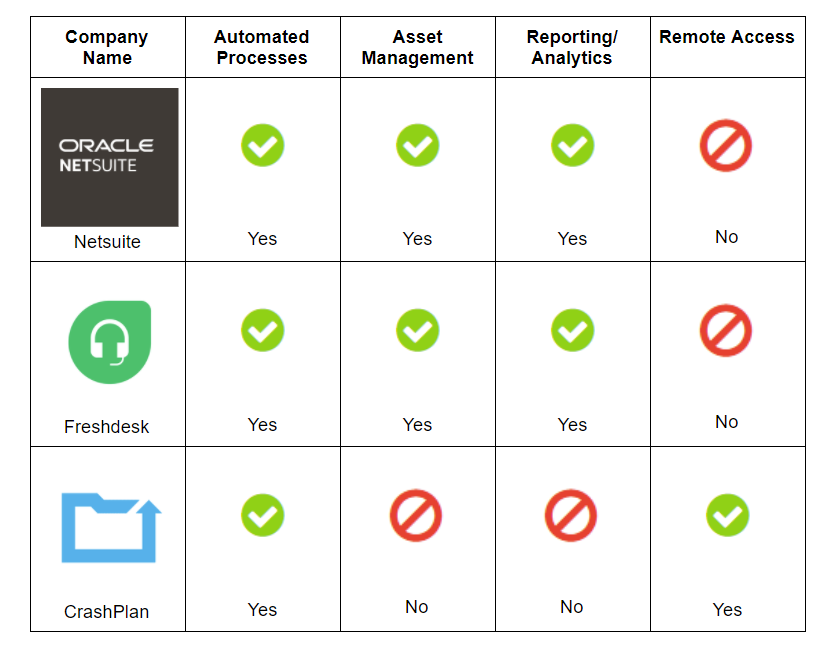

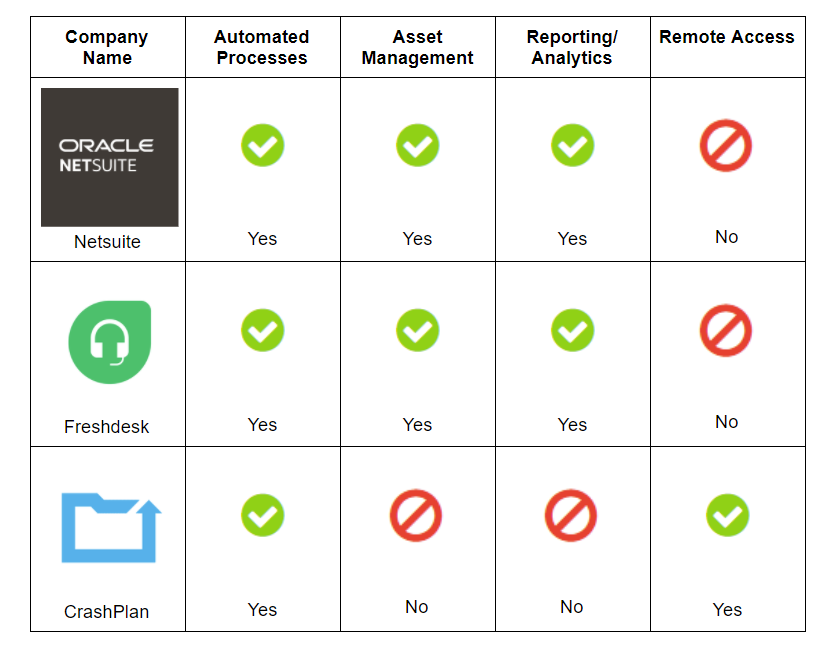

Small businesses may not have a fully-staffed IT department, so they may rely more heavily on automated processes. IT management software that emphasizes automation can help SMBs grow and scale with the IT resources they currently have available.

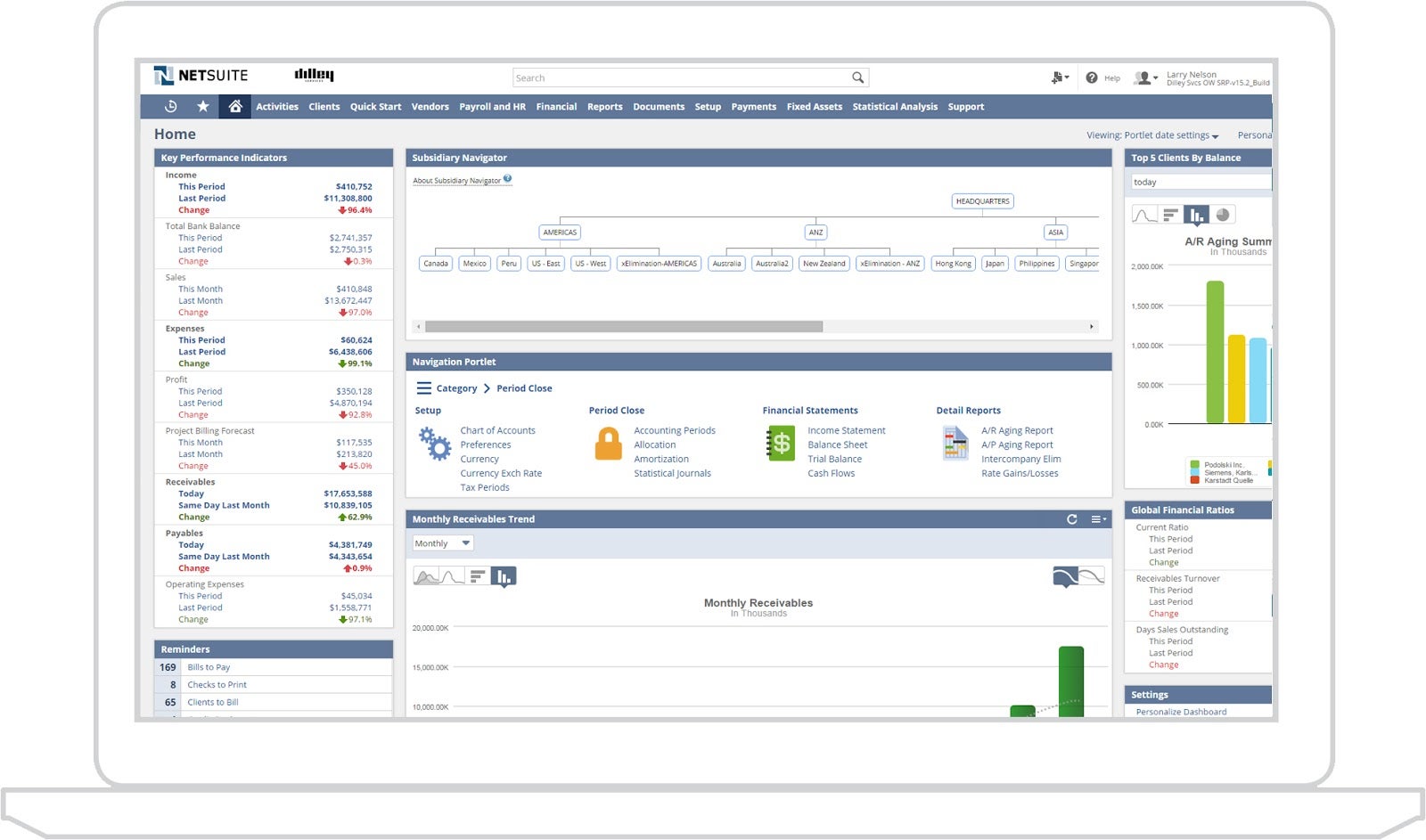

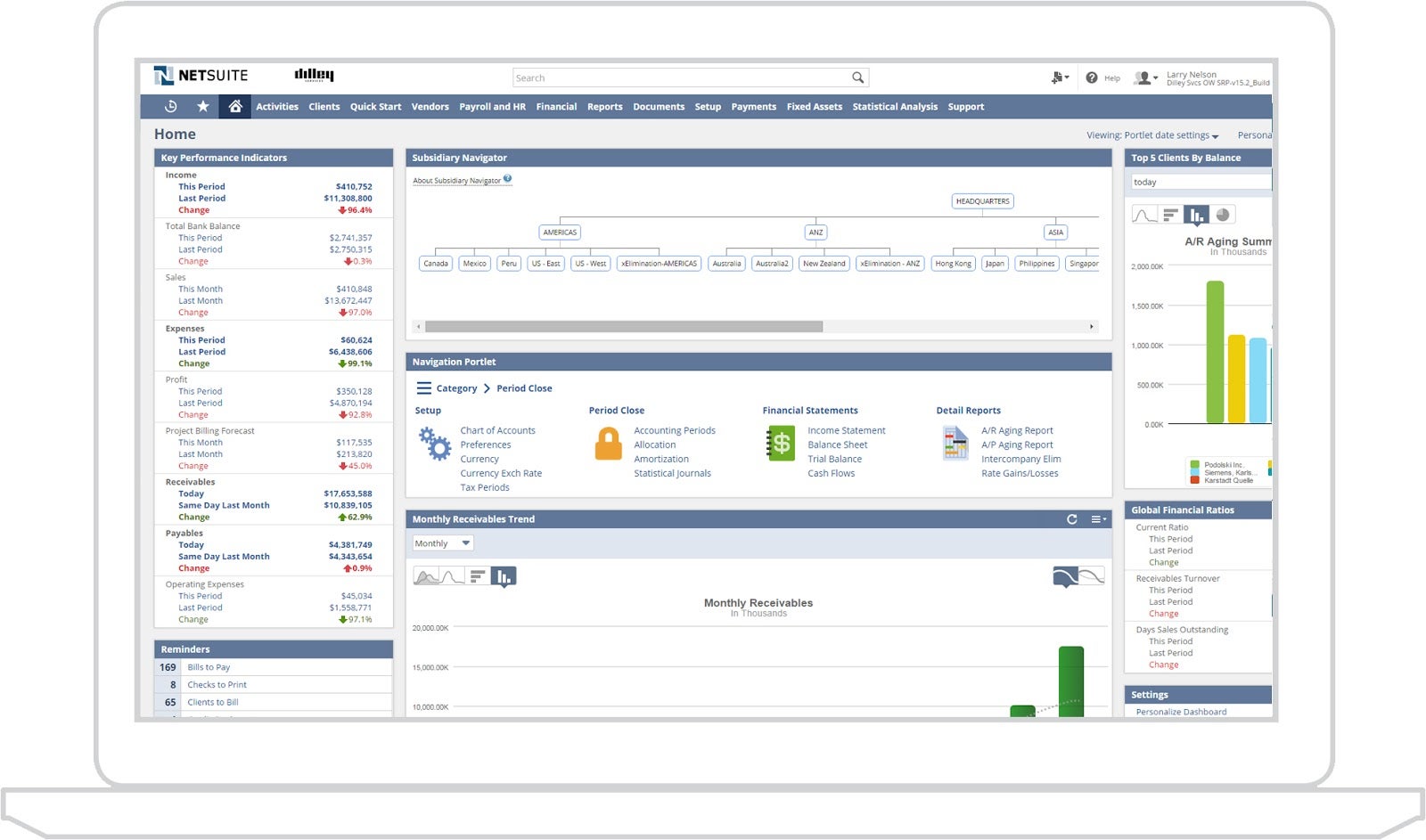

Netsuite Enterprise Resource Planning (ERP) Software

When you don’t have a large IT department, planning out your technology resources can be a difficult task. Netsuite can identify which resources are the most valuable and assign them to the projects that need them the most. The software is cloud-based, so it’s easy to set up and it won’t take up valuable server space on your premises.

Netsuite also automates some of your repetitive backend processes, so your IT team doesn’t have to spend extra time tending to them. Additionally, the software prioritizes tasks to tell your IT team where they should place most of their energy. It also ensures that those processes are visible across different departments to keep everyone on the same page about which tasks are being given priority.

Netsuite’s approach focuses on Software-as-a-Service (SaaS), so the platform can handle mundane tasks while your IT department focuses on revenue-increasing activities. By automating repetitive tasks like password changes, the software frees up your IT team for more important work and helps make your business more efficient overall. Additionally, Netsuite offers a variety of other software solutions, so all of your systems can easily work together.

Netsuite customer complaints

Unfortunately, the software sometimes runs slowly, which means it isn’t saving companies as much time as it should be. It’s also fairly difficult to customize the software to meet your business’s needs. Most modules come standard, so you’ll have to do extra work or spend extra money to make them fit your company.

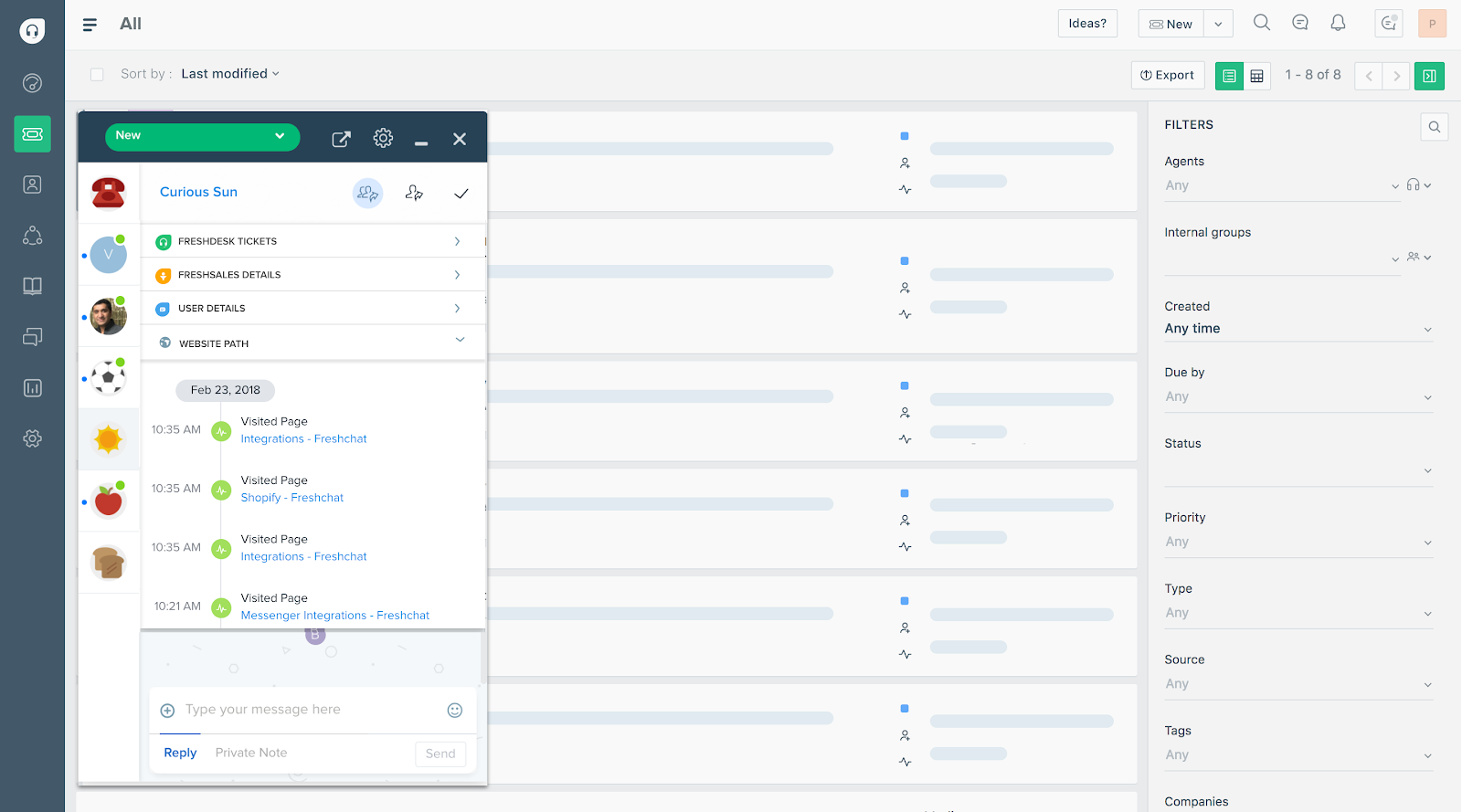

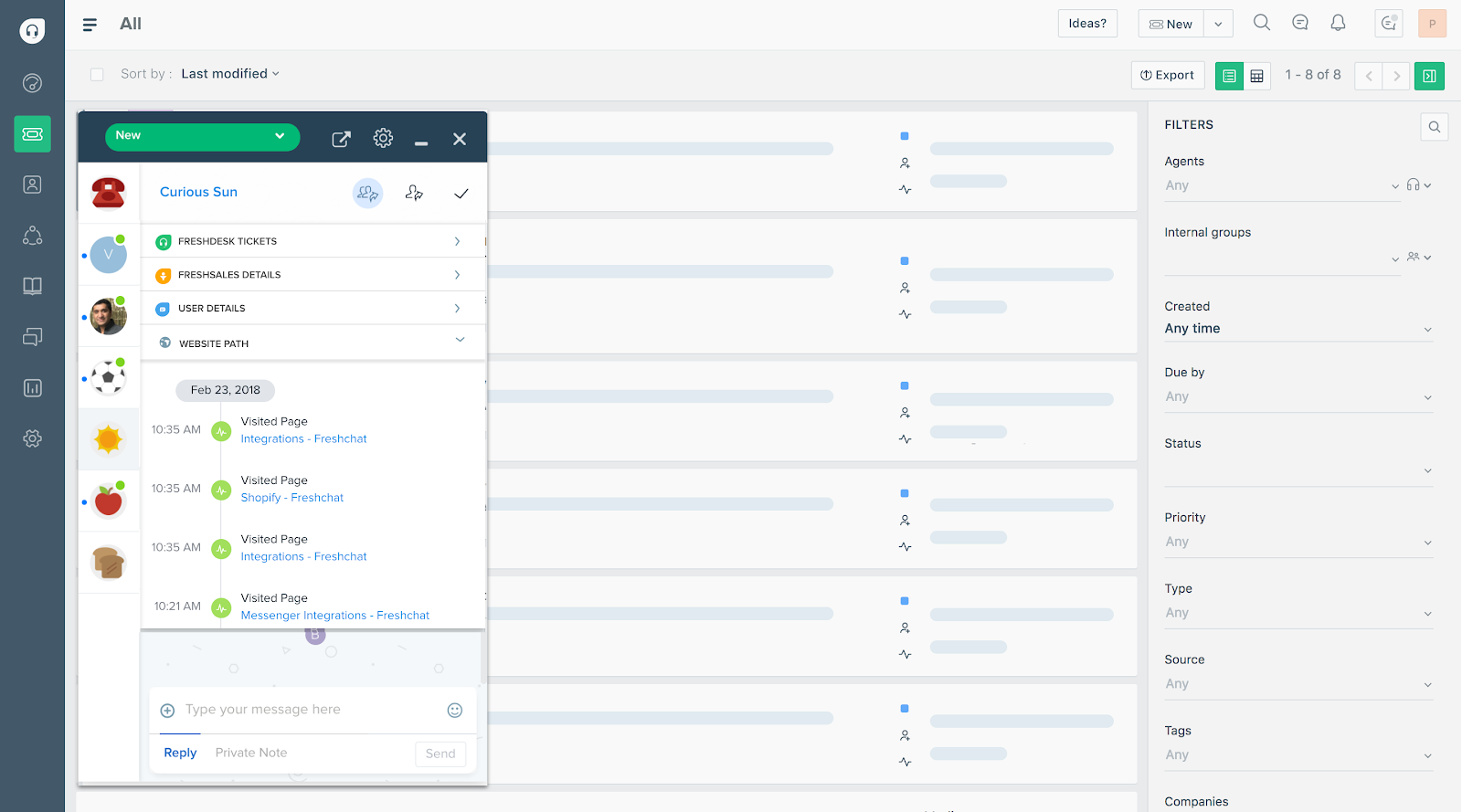

Freshdesk Service Desk Software

With small businesses, you can’t always offer round-the-clock support to your employees or customers. Luckily, Freshdesk can help with this in a couple of ways. First, the system simplifies the ticketing process by combining everything into one inbox that your team can work from. It automatically detects and alerts you when two agents have started working on the same issue, so you won’t waste time double-responding to tickets. You can also set deadlines for ticket response and resolutions to keep things moving quickly.
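To make the duplicate-work alert concrete, here is a minimal Python sketch of how that kind of collision detection could work in principle. It is not Freshdesk's implementation or API; the `TicketBoard` class, its methods, and the ticket and agent names are all hypothetical.

```python
from collections import defaultdict


class TicketBoard:
    """Tracks which agents are working on each ticket (illustrative only)."""

    def __init__(self):
        # Maps a ticket ID to the set of agents currently working on it.
        self._active = defaultdict(set)

    def start_work(self, ticket_id, agent):
        """Register an agent on a ticket and return anyone already on it."""
        others = sorted(self._active[ticket_id] - {agent})
        self._active[ticket_id].add(agent)
        return others

    def stop_work(self, ticket_id, agent):
        """Remove an agent from a ticket once they are done."""
        self._active[ticket_id].discard(agent)


board = TicketBoard()
first = board.start_work("T-101", "ana")   # nobody else is on the ticket yet
second = board.start_work("T-101", "ben")  # ana is already working on it
if second:
    print(f"Heads up: {', '.join(second)} is already working on T-101")
```

A non-empty return value is the signal to warn the second agent before they send a duplicate reply.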

Additionally, Freshdesk offers an AI chat option, so your customers and employees can help themselves, which reduces the time your agents have to spend with them. Its Freddy AI solution delivers automatic and precise answers to questions for faster resolutions. In the knowledge base, Freshdesk provides automatic suggestions to help clients and team members find answers faster.

By optimizing your service desk, Freshdesk frees up your IT team to work on larger company initiatives and provide better service to other departments. There’s also a trial period, so you can test out the system for free without having to commit to anything.

Freshdesk customer complaints

The spam filters in the software could be more robust, as they don’t always catch suspicious emails. The reporting features also leave a lot to be desired: they don’t provide an hourly view of traffic, which makes it hard to predict when traffic will spike during the day.

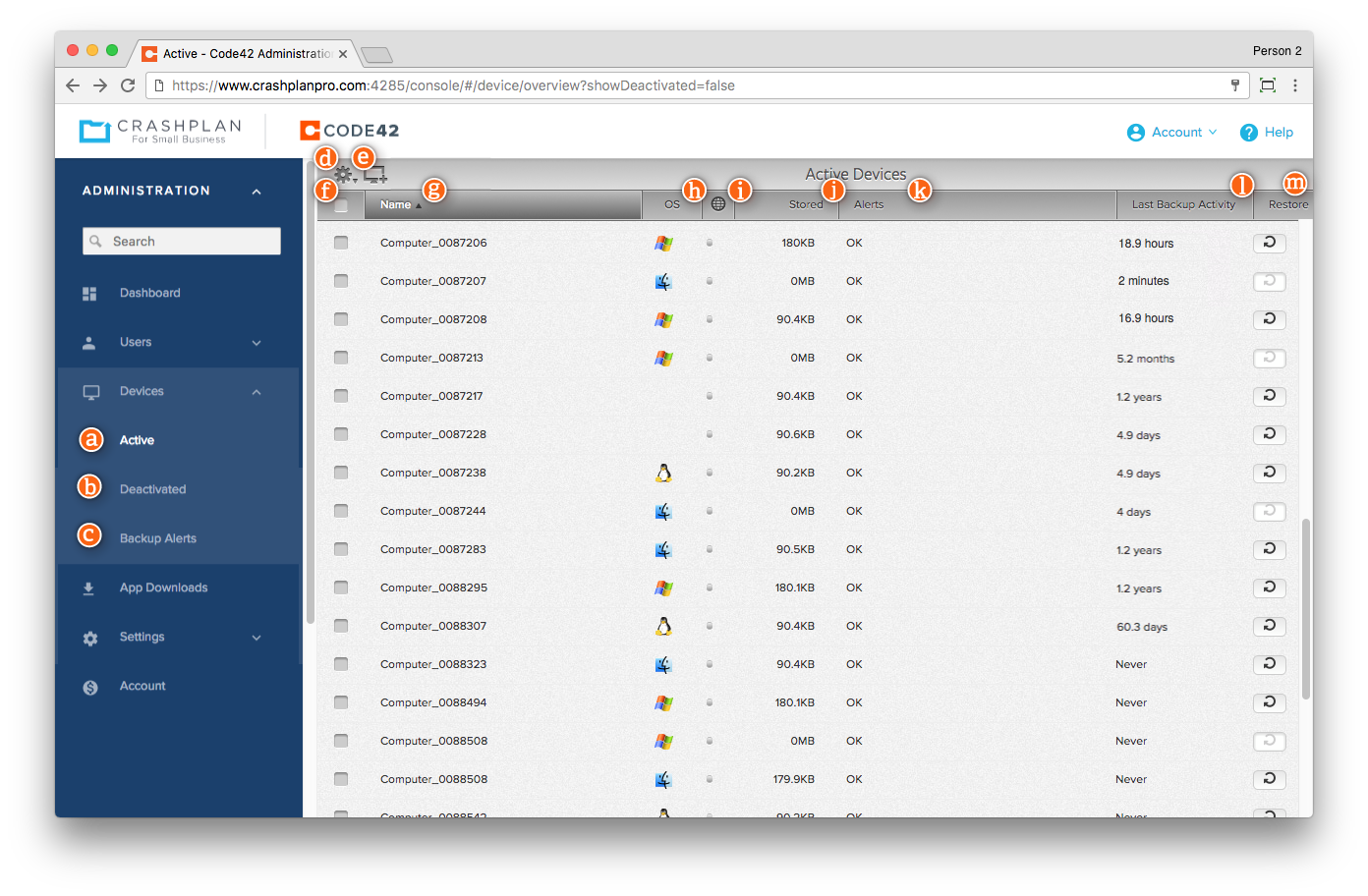

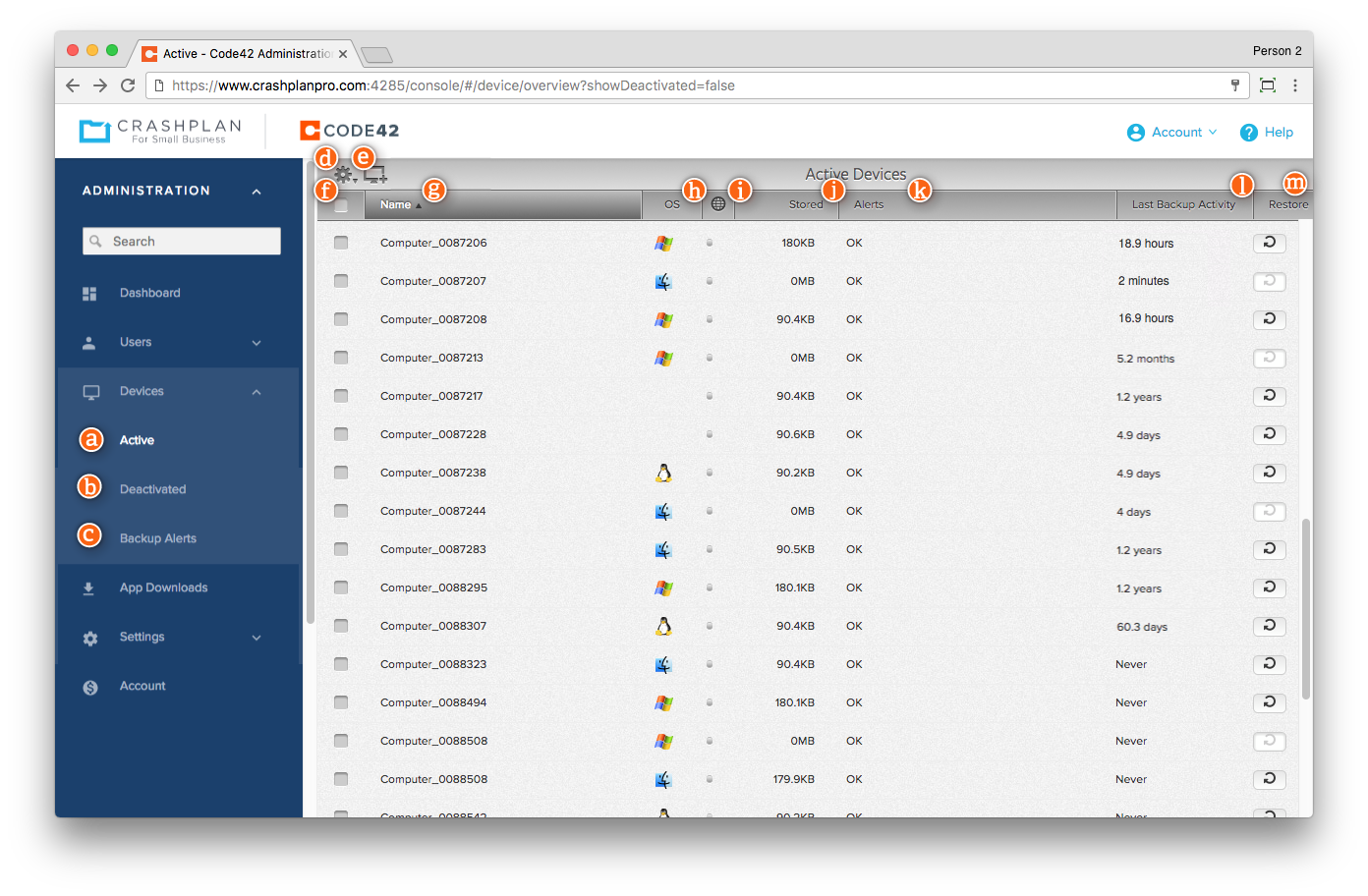

CrashPlan Cloud Backup Software

CrashPlan ensures you have backups of all your data in the event of a disaster or ransomware attack. For a small business, even a small amount of lost data can be devastating. It could take a large amount of your IT team’s time to recover it, which could set you back on other projects. CrashPlan automatically backs up your data and stores it on the cloud to give you peace of mind.

There are no file size restrictions. You can restore files from any device on your network. CrashPlan’s backups run automatically in the background, so you won’t have to spend extra time backing up your files or remember to do it. You can even customize how long CrashPlan stores your deleted files, so it’s easier to recover something if you realize you need it later.

A single ransomware attack can cost businesses up to $200,000 once everything is said and done. By having current backups of all your files, you can better protect yourself against ransomware and keep yourself from having to pay to recover your files. CrashPlan also offers dedicated support by chat and email with documentation to keep your business running smoothly.

CrashPlan customer complaints

CrashPlan’s pricing has increased quite a bit over the years, which may be prohibitive for some users. Additionally, the software uses a lot of system resources when backing up data.

Top IT management software for enterprises

Enterprise businesses need to ensure that their data is secure. IT management systems with an emphasis on security can help large businesses focus on growing and improving their products or services rather than protecting their clients’ information.

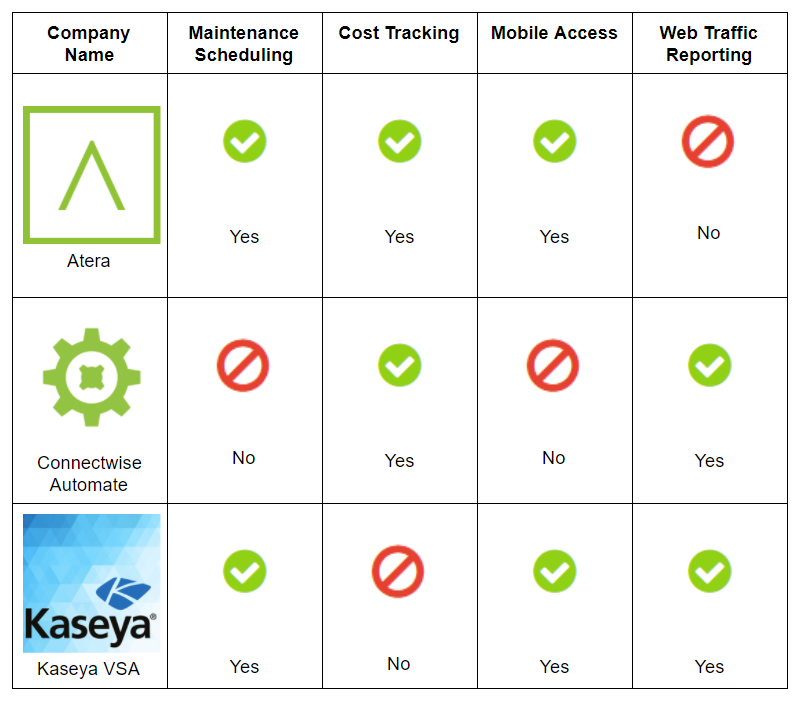

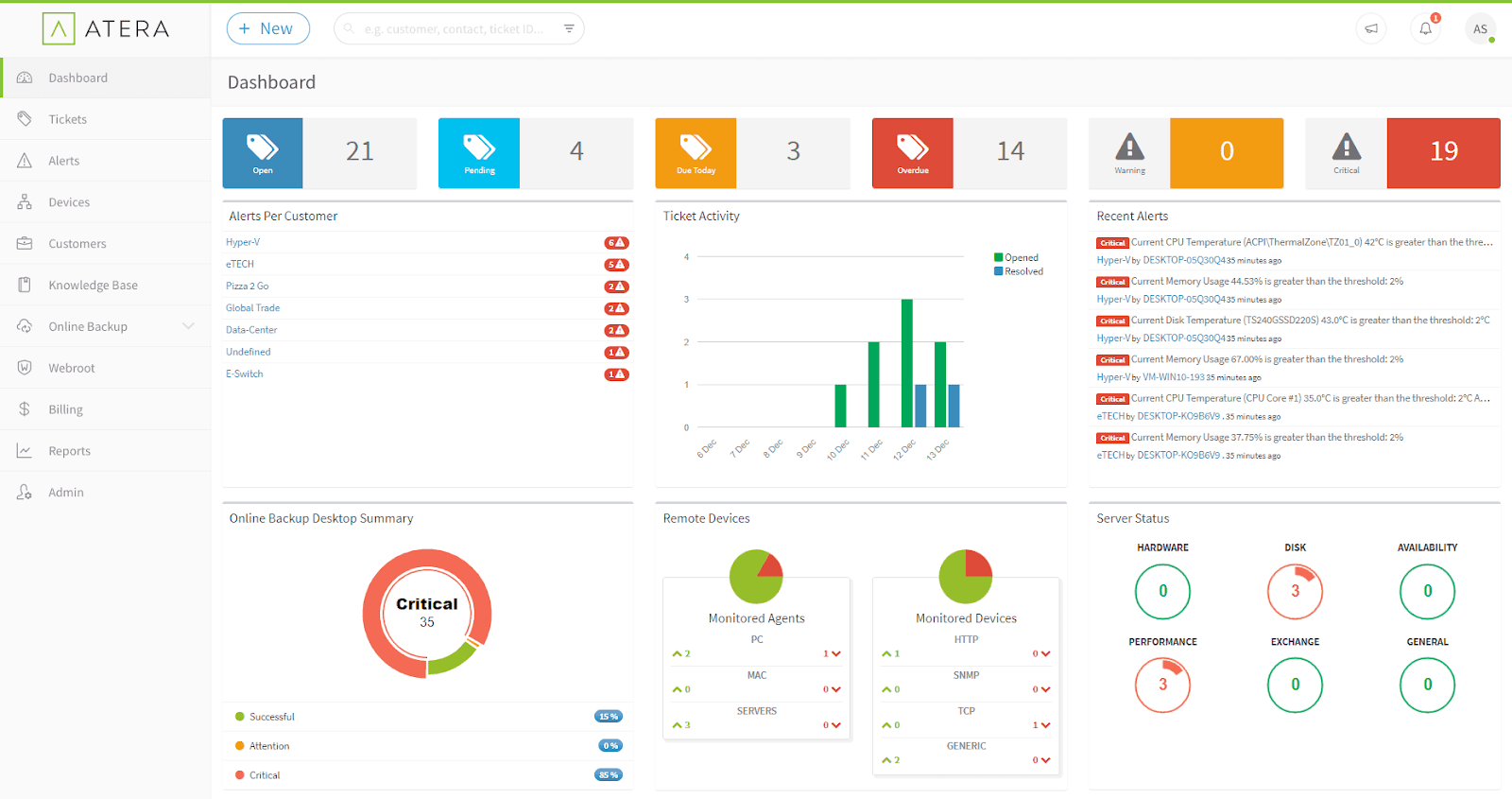

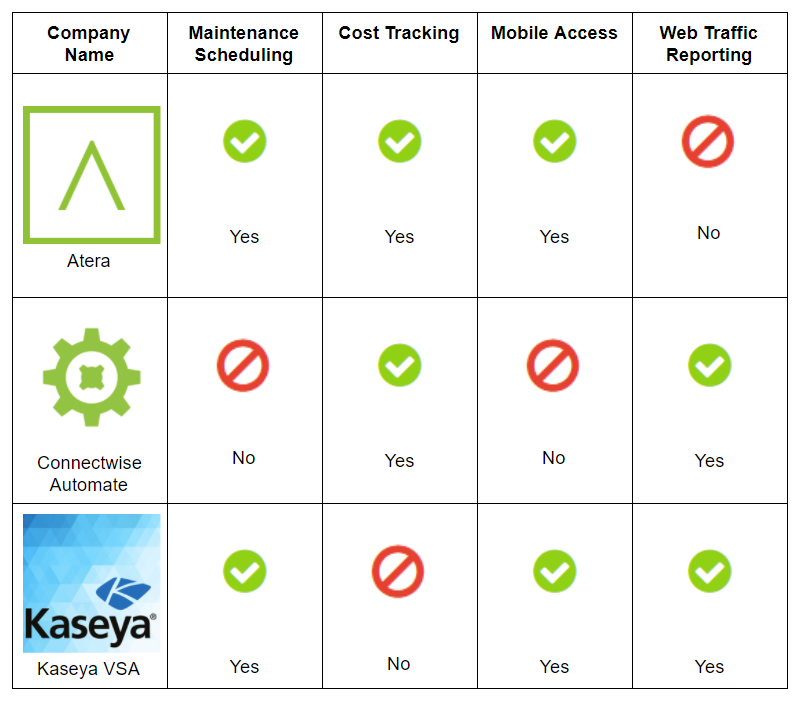

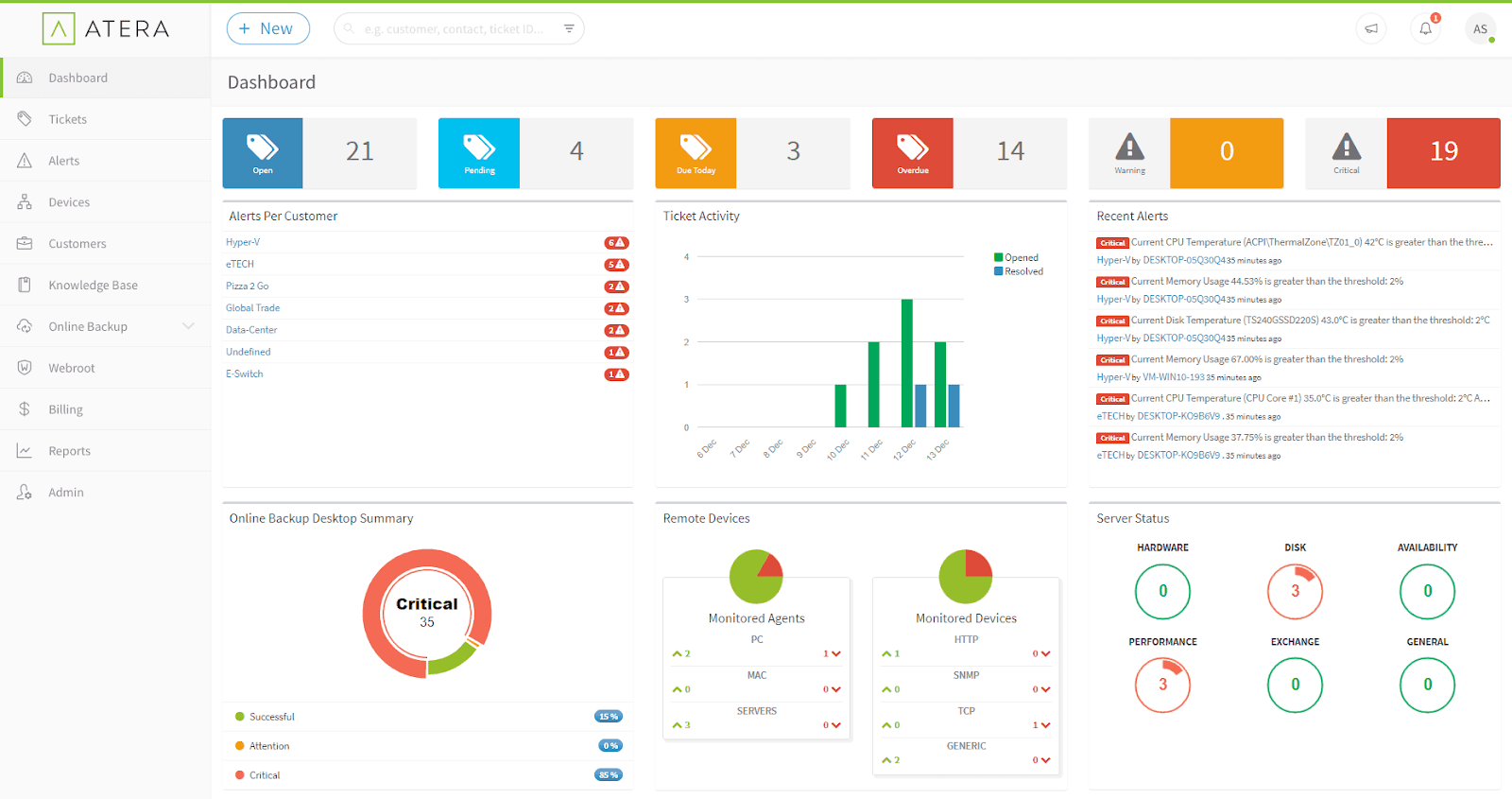

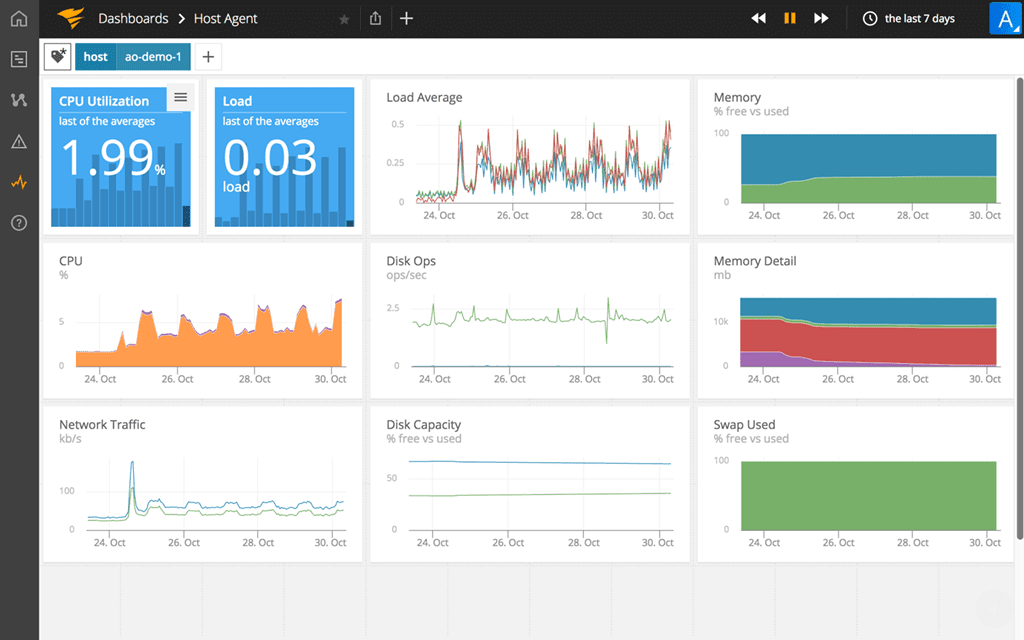

Atera Remote Monitoring and Management Software (RMM)

Large companies tend to have employees in a variety of locations around the world who need IT support. Atera offers remote monitoring and management options, so your IT team can keep an eye on operations wherever they are. Alerts are delivered in real time, so you’ll know about problems as they arise and can mobilize your IT team more quickly to respond to them.

Not only can you monitor your system remotely, but you can perform remote maintenance as well. Atera provides an entire suite of remote maintenance services that let you uninstall applications, install necessary patches, and run a variety of commands and scripts. There’s also 24/7 live support to help you deal with issues whenever they arise.

Along with remote access, Atera provides IT automation for a variety of processes. The software can perform full system scans, check for updates, delete temporary files, and more to keep your system running smoothly without requiring additional work from IT. You can also customize which servers and workstations get these features.

Atera customer complaints

The patching system is a bit complicated because you can’t approve which patches should be installed; instead, you have to exclude patches you don’t want to be installed. The reporting features are also very basic and don’t allow for much customization.
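The difference between excluding patches and approving them is easier to see side by side. The Python sketch below contrasts the two models under simple assumptions; it does not use Atera's API, and the function names and patch IDs are hypothetical.

```python
def install_by_exclusion(available, excluded):
    """Exclusion model: install everything that is not explicitly excluded."""
    return [patch for patch in available if patch not in excluded]


def install_by_approval(available, approved):
    """Approval model: install only what is explicitly approved."""
    return [patch for patch in available if patch in approved]


available = ["KB-001", "KB-002", "KB-003"]

by_exclusion = install_by_exclusion(available, excluded={"KB-002"})
by_approval = install_by_approval(available, approved={"KB-001"})

print(by_exclusion)  # every patch except the excluded one
print(by_approval)   # only the approved patch
```

Under the exclusion model, a newly released patch is installed unless someone remembers to exclude it, which is why some teams prefer explicit approval.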

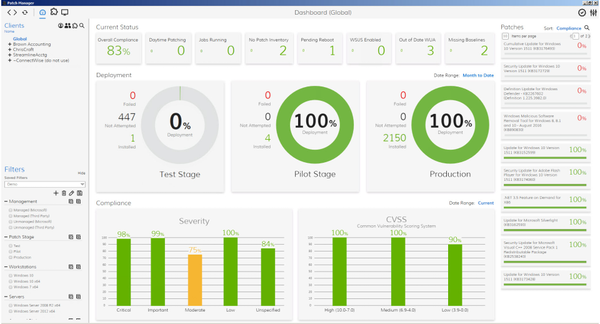

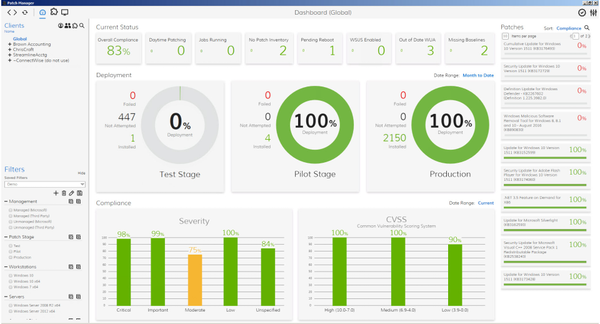

Connectwise Automate RMM Software

As a large organization, you have a ton of devices on your network and need to be able to get to them all in case of an incident. Connectwise Automate offers quick access to all of the endpoints on your network, so you can resolve issues quickly and keep any downtime to a minimum. The system even automatically scans the network to detect all devices and make sure every entry point is covered.

Connectwise can also help you automate repetitive tasks as the name suggests, so you can reduce operating costs and do more without increasing the size of your IT staff. Some of the jobs the software can automate for you include scheduling patches, generating random passwords, and the remote management of routers and similar devices.

With so much data on your servers, you also need to continually monitor your network for threats and other issues. Connectwise can help you find issues before they turn into something bigger by monitoring the network in real time and alerting you to problems as they happen. These monitoring systems help your IT staff be proactive about replacing or repairing machines.

Connectwise customer complaints

Customer support is a big issue for Connectwise because the support team is hard to contact and they have slow response times. There are also a ton of features which can create a steep learning curve.

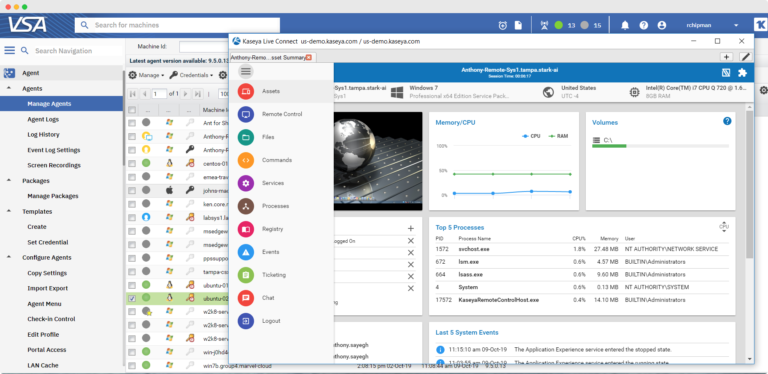

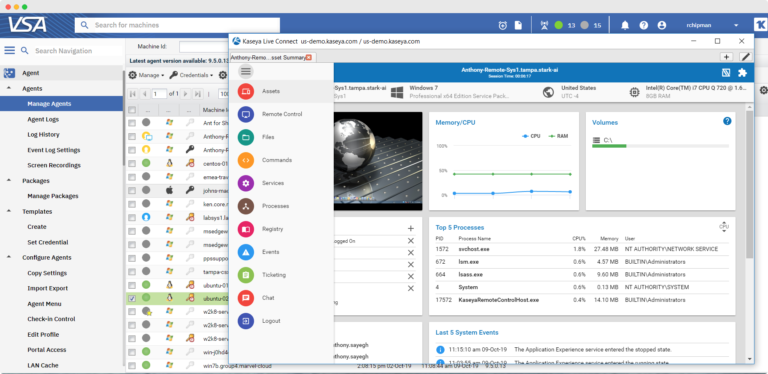

Kaseya VSA RMM Software

One of the biggest threats to IT security is human error. How many email phishing attempts does your workplace see each week? Kaseya recently acquired Graphus, so its IT management suite now contains a powerful email security and phishing defense platform. This keeps your network safer from attacks, and it frees up your IT department’s time to focus on other issues.

Kaseya secures your network and makes your IT department more efficient. Your technicians get remote access to endpoints, so they can fix issues without disrupting the user. Additionally, the dashboard allows you to view and manage your entire IT infrastructure to keep everything up and running. Finally, by automating background tasks, you can have a higher ratio of endpoints to IT staff, so your operating costs are lower.

Kaseya also offers different add-ons you can incorporate into your package for a more robust IT management suite. Some of these features include antivirus and anti-malware software, a compliance manager for HIPAA and other regulations, and patch management for third-party software.

Kaseya customer complaints

The user interface is old and clunky, which can cause problems for some users. Additionally, customer support is slow to respond and sometimes takes a few days to get up to speed on technical issues.

Customers’ choice – highest customer-rated software

Sometimes, reading other customers’ reviews is the best way to decide if a software is right for you. These three vendors all had excellent reviews and could be a good fit for a variety of companies.

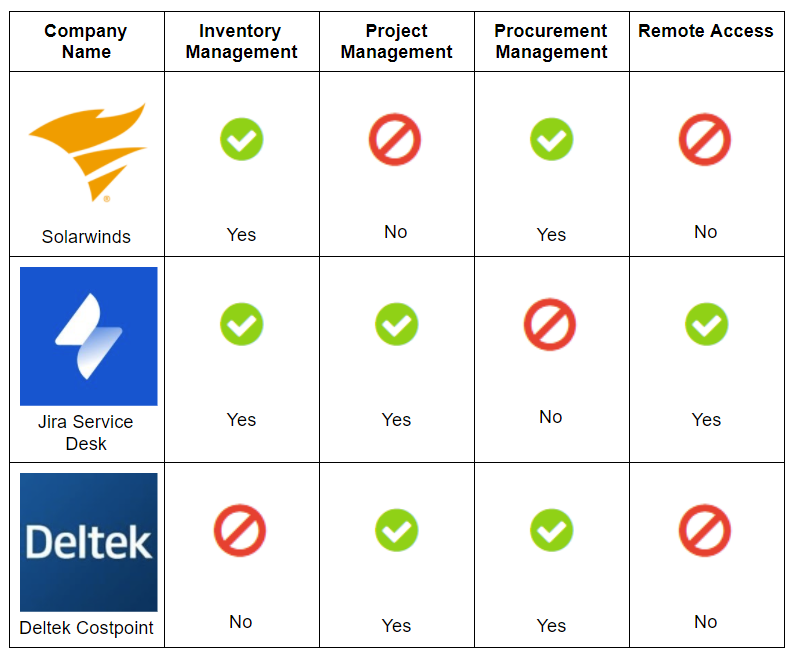

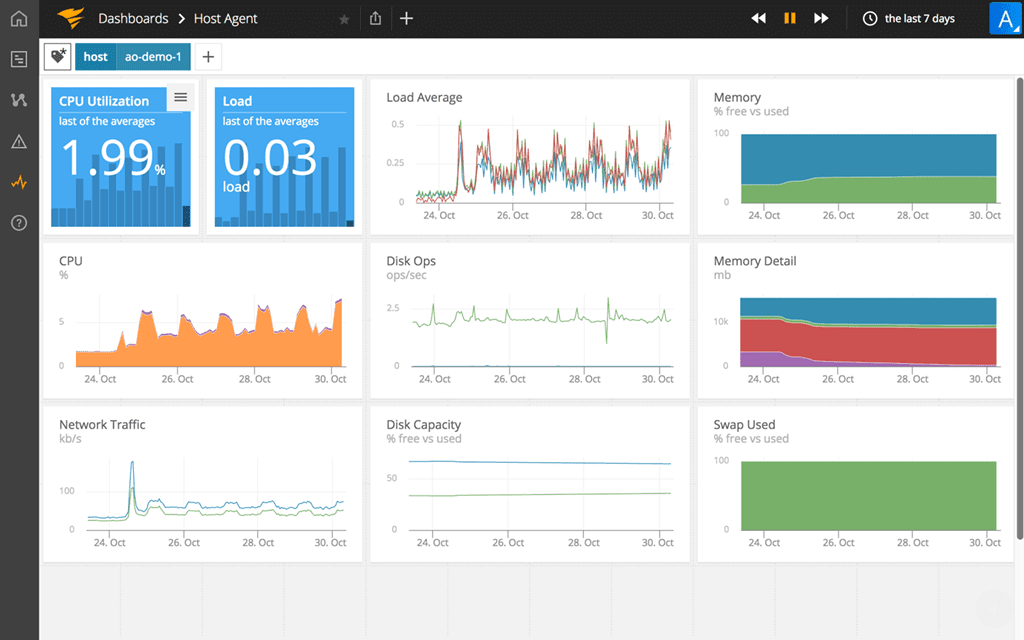

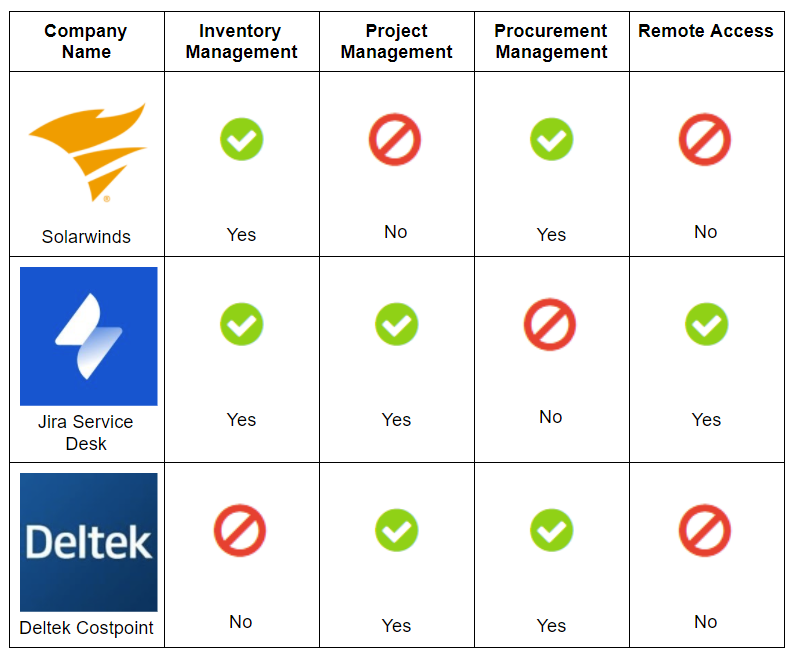

Solarwinds Managed Services Software

Solarwinds is a cloud-based IT management platform that works well for businesses of all sizes. The system consolidates support requests from a variety of sources, including email, ticket submissions, phone calls, and more, into a single dashboard to speed up response times. It also provides automated rules to get questions to the right people, so your IT team can resolve tickets more quickly.

The asset management dashboard shows you a consolidated list of all the devices connected to the network and their functionality. This program makes it easy to provide support and update software licenses because you can see the status of each device from a single screen. Solarwinds keeps downtime to a minimum, saving your company time and money on operating costs.

Each device on your network poses some level of risk, and it’s up to the IT team to minimize it. Solarwinds provides a risk management tool that keeps antivirus software up to date and identifies devices containing unauthorized software, so your IT department can identify risks quickly and remove them before they turn into major issues.

Solarwinds customer complaints

The reporting features Solarwinds offers are limited, and there aren’t many customization options available. Additionally, some of the features aren’t all that intuitive, so it can be difficult to get comfortable with the software.

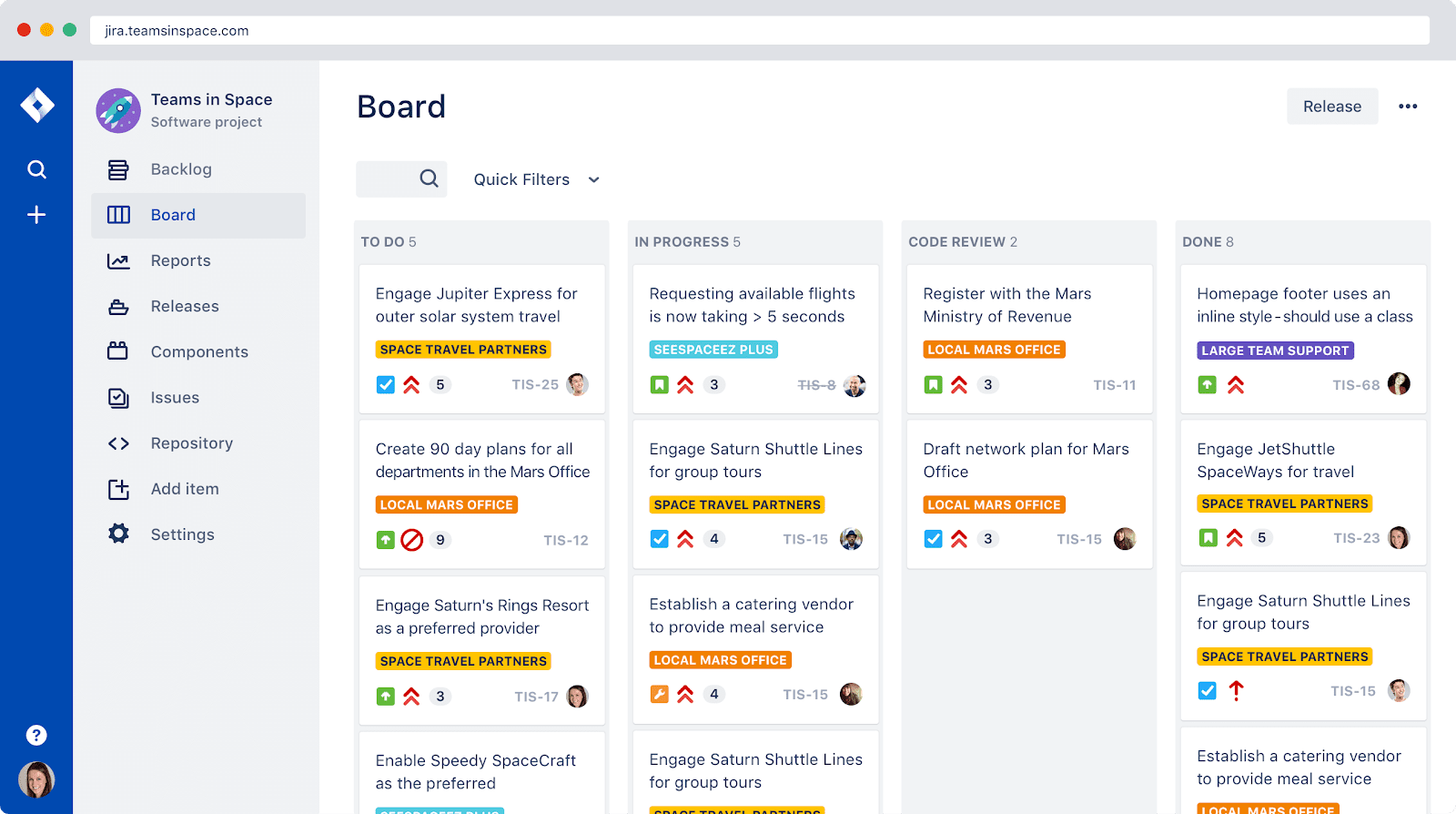

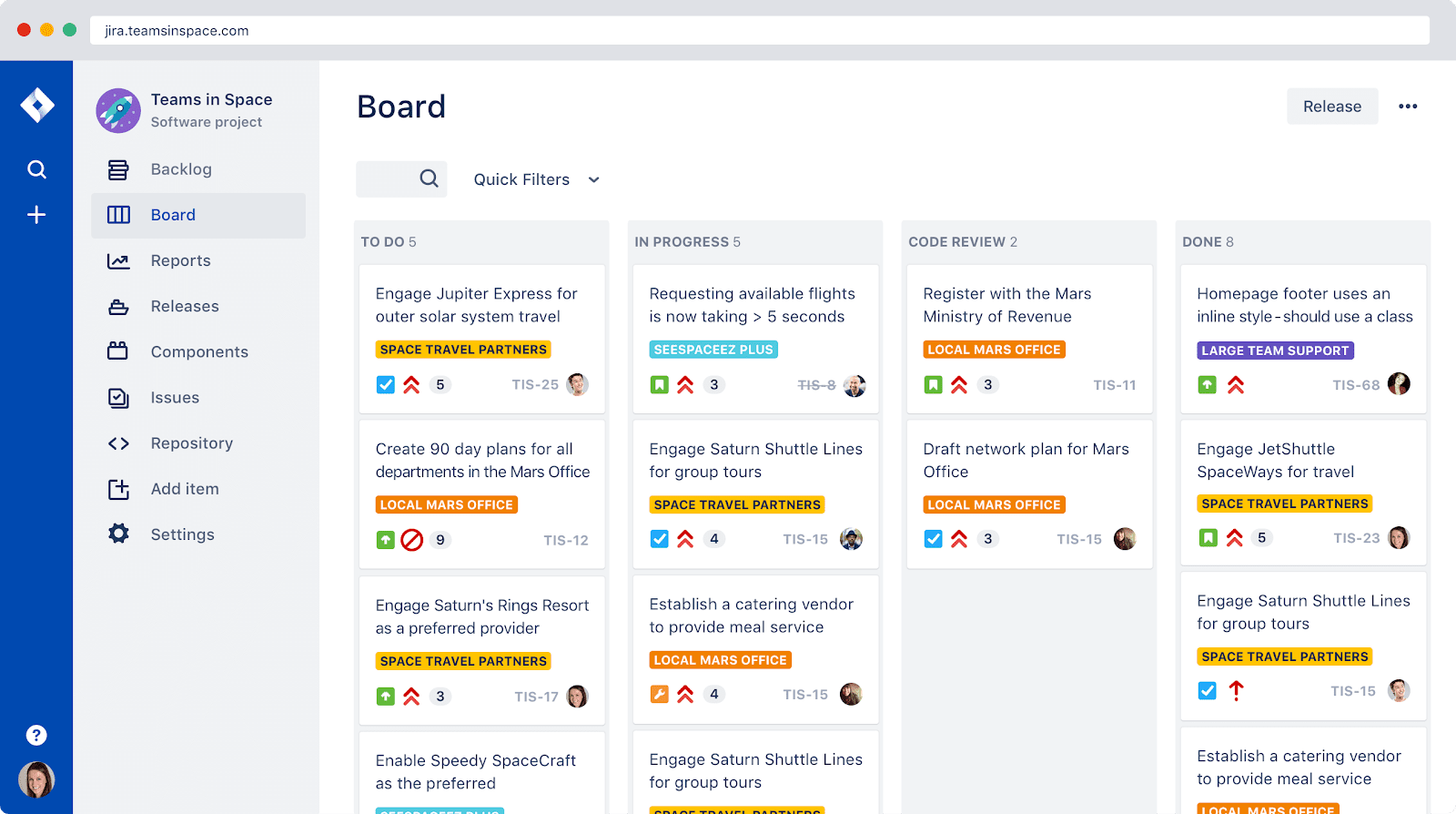

Jira Service Desk Software

Jira Service Desk is an IT management system that focuses on helping growing companies move away from email as a means of asking for technical support. Jira offers a self-service portal to help your employees solve problems without involving IT whenever possible. The knowledge base brings the most relevant results to the top based on what employees search for, and if they can’t find what they need, they can easily submit help requests.

The incident management program makes it easy to identify, resolve, and learn from incidents to keep your network safer. You can set automated rules, so the system automatically prioritizes incidents as they come in and assigns them to a team member to handle. This way, your team gets the most important issues resolved faster. The system also integrates with a variety of tools that can help make your security responses even better.
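As a rough illustration of rule-based triage, the Python sketch below prioritizes incoming incidents by keyword and assigns them in rotation. It is a simplified stand-in, not Jira's automation engine or API; the keyword rules, the round-robin assignment, and all names are assumptions made for the example.

```python
from dataclasses import dataclass
from itertools import cycle


@dataclass
class Incident:
    summary: str
    priority: str = "medium"
    assignee: str = ""


# Hypothetical keyword rules, checked in order; the first match wins.
RULES = [("outage", "critical"), ("phishing", "high"), ("password", "low")]
PRIORITY_ORDER = {"critical": 0, "high": 1, "medium": 2, "low": 3}


def triage(incidents, team):
    """Set a priority and an assignee on each incident, most urgent first."""
    rotation = cycle(team)  # simple round-robin assignment
    for incident in incidents:
        text = incident.summary.lower()
        incident.priority = next(
            (priority for keyword, priority in RULES if keyword in text),
            "medium",  # default when no rule matches
        )
        incident.assignee = next(rotation)
    return sorted(incidents, key=lambda i: PRIORITY_ORDER[i.priority])


queue = triage(
    [
        Incident("Password reset request"),
        Incident("Email outage in the Berlin office"),
        Incident("Printer jam on floor 2"),
    ],
    team=["ana", "ben"],
)
for incident in queue:
    print(incident.priority, incident.assignee, incident.summary)
```

In practice, rules would likely match on structured fields such as request type or affected service rather than free-text keywords, but the flow of match, prioritize, assign, and sort stays the same.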

Jira Service Desk also includes asset management. By tracking all of the different devices on your network, the IT team has context when a ticket is submitted or an incident is reported. With context, it’s easier for them to identify and fix the problem.

Jira customer complaints

Jira has a lot of features, which makes the learning curve fairly steep. There are tutorials available, but just know it’s going to take time to get used to the system. Additionally, the system might be cost prohibitive for smaller companies.

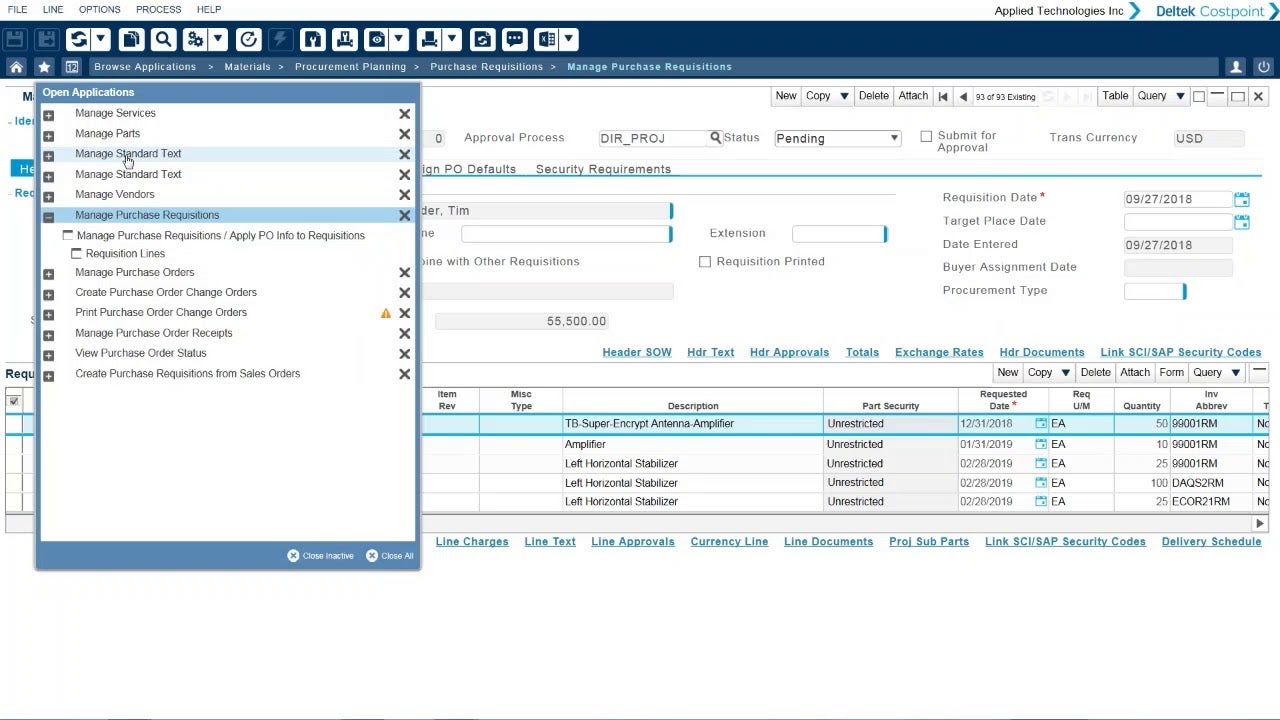

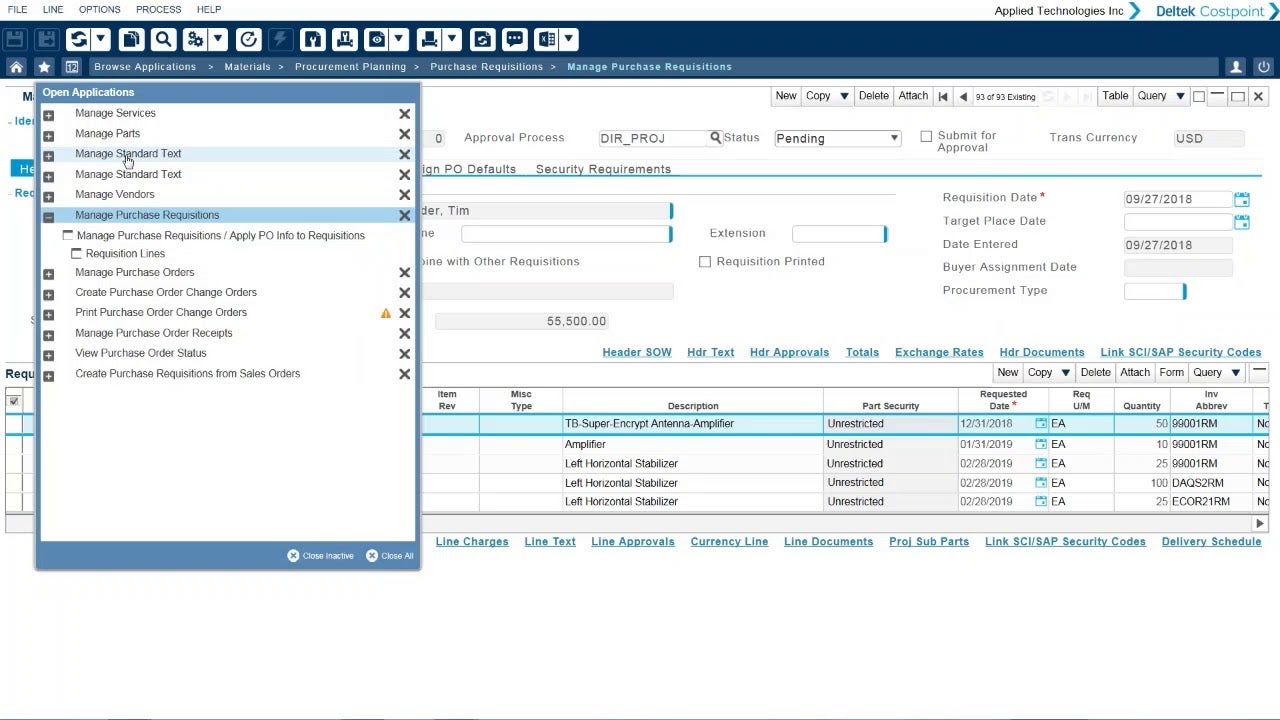

Deltek Costpoint ERP Software

Deltek Costpoint is a great option for project-based companies who need to optimize their IT department. In fact, it was specifically made for government contractors and works well for manufacturing businesses. They offer a whole business operations suite, but their IT management tools are still worth noting.

Deltek Costpoint digitizes internal processes to make them more flexible, allow for automation, and provide better visibility across your entire organization. By automating your backend processes, you can shorten project timelines, produce better results, and free up your IT team to work on projects that increase revenue.

Because it’s built for government contractors, Deltek also comes with strict compliance support. Every transaction is traceable for better support and security. Plus, there are built-in security features, like asset management, to keep your company compliant with government regulations.

Deltek customer complaints

One of the major issues is that if an employee isn’t assigned to a project for a while, access has to be completely reset by HR, which can slow down the process. The user interface also isn’t very intuitive, which can cause some issues.

Choosing IT management software for your business

Many IT professionals are feeling burned out due to the increased demands COVID-19 has placed on them. Choose an IT management software for your business to optimize their workload and allow them to focus on important processes rather than repetitive tasks. If you find your IT staff is feeling that burnout, you should look for a program with a focus on automation and then take into consideration other factors you may need, like security tools and budgetary restrictions. No matter what you decide, you’re looking for a tool that’s going to streamline your IT department, so make sure you don’t choose one that’s going to create more work for them in the long run.
