The difference between creating a data lake and a swamp comes down to how well data moves through the ecosystem. It’s not enough to simply dump data into a central repository. An IT organization needs to be able to build a pipeline for managing the flow of data into and out of the lake.

With that issue in mind, Syncsort today unveiled DMX-h, a framework for managing workflows around Big Data repositories that also gives organizations a way to centrally manage multiple Big Data repositories.

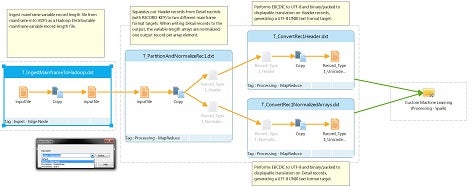

Tendü Yoğurtçu, general manager of Big Data business for Syncsort, says DMX-h makes use of new and traditional extract, transform and load (ETL) technologies to enable organizations to create workflows spanning multiple versions of Hadoop and the Apache Spark in-memory computing platform. That’s important, says Yoğurtçu, because Apache Spark 2.0, for example, has a completely different set of application programming interfaces (APIs) than the first version. As Big Data technologies continue to evolve rapidly, Yoğurtçu says, organizations will need workflows that span multiple Big Data platforms and can easily absorb innovations across them.

“There really shouldn’t be a need to upgrade everything all at once,” says Yoğurtçu.

Most organizations are still trying to develop a set of processes for maximizing the value of data. They generally have a handle on the underlying Big Data platforms. The next big challenge is finding a way to make sure the data that flows in and out of the platform is the most current and relevant data available. In fact, to address the data quality aspect of the equation, Syncsort acquired Trillium Software from Harte Hanks late last year.

Of course, part of the irony here is that the Big Data community is relearning ETL lessons that organizations running mainframes to process large amounts of data have known for decades. The challenge is figuring out how to apply many of those same concepts across a distributed Big Data environment that in far too many instances is choking on stagnant data.